This article examines how to integrate Artificial Intelligence (AI) and Machine Learning (ML) capabilities into modern data architectures. We trace how data architectures have evolved to accommodate AI/ML workloads, discuss key components and design considerations, and examine the impact of intelligent data pipelines and decision intelligence frameworks. The discussion closes with an example architecture and practical insights from a data architect's perspective.

The Rise of AI/ML in Data Architectures

The integration of AI and Machine Learning (AI/ML) into data architectures represents a significant shift from traditional data warehousing and business intelligence approaches. This evolution is driven by the unique characteristics of AI/ML workloads, which demand the ability to handle massive volumes and diverse types of data, accommodate iterative experimentation, and provide the necessary computational power for complex model training.

Traditional data architectures often struggle to meet these demands. However, modern architectures have emerged that effectively address these challenges by incorporating key components like scalable data lakes for managing vast amounts of raw data, distributed processing frameworks such as Apache Spark and Hadoop for efficient data processing and model training, and specialized hardware like GPUs and TPUs to accelerate computationally intensive tasks. Furthermore, the integration of ML platforms like TensorFlow, PyTorch, and scikit-learn facilitates streamlined model development and deployment.

This shift towards AI/ML-driven data architectures is not merely about adopting new technologies; it represents a fundamental change in how organizations leverage data for decision-making and innovation. By embracing the capabilities of AI/ML, businesses can unlock new insights, automate processes, and gain a competitive edge in today's data-driven world.

In more detail, AI/ML workloads require:

- Massive data volumes and variety: AI/ML models thrive on large, diverse datasets encompassing structured, semi-structured, and unstructured data.

- Iterative experimentation: The process of developing, training, and deploying models is iterative, requiring flexibility and agility in the data architecture.

- Computational intensity: Training complex models demands significant computational power, often necessitating specialized hardware and distributed processing frameworks.

Modern data architectures address these challenges by incorporating:

- Scalable data lakes: To store and manage vast amounts of raw data in various formats.

- Distributed processing frameworks: Such as Apache Spark and Hadoop for efficient data processing and model training.

- Specialized hardware: Including GPUs and TPUs to accelerate computationally intensive tasks.

- ML frameworks: Such as TensorFlow, PyTorch, and scikit-learn to facilitate model development and deployment.

Key Components and Design Considerations

Designing a data architecture that supports AI/ML requires a holistic view of the data lifecycle, from ingestion and preparation through model training, deployment, and monitoring. This section covers the core components of such an architecture and the design considerations (governance, scalability and performance, security, and flexibility) that determine whether it can keep pace with evolving AI/ML demands. Getting these right gives organizations a robust foundation for using AI/ML to drive innovation and meet strategic objectives.

Integrating AI/ML into your data architecture requires careful consideration of several key components:

- Data Ingestion: Efficiently ingest data from diverse sources, including streaming data platforms, databases, and APIs.

- Data Preparation: Clean, transform, and prepare data for model training and inference. This includes feature engineering, data cleansing, and data validation.

- Model Training: Leverage scalable platforms and infrastructure for efficient model training and hyperparameter tuning.

- Model Deployment: Deploy models for real-time or batch inference, integrating them with applications and business processes.

- Model Monitoring and Management: Continuously monitor model performance, detect drift, and retrain models as needed.
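The monitoring step ("detect drift") can be made concrete with the population stability index (PSI). PSI is one common drift statistic among several. The sketch below is a minimal, dependency-free version. The bin count, the `eps` floor, and the 0.25 retraining threshold (a frequently cited rule of thumb) are assumptions to tune per feature, and `needs_retraining` is a hypothetical helper name.

```python
import math
from typing import Sequence

# Commonly cited rule of thumb: PSI above ~0.25 signals a significant shift.
DRIFT_THRESHOLD = 0.25


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10, eps: float = 1e-6
) -> float:
    """Compare a feature's training-time distribution with its live distribution."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Bin edges come from the training data; out-of-range live values
            # are clamped into the first or last bin.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(expected: Sequence[float], actual: Sequence[float]) -> bool:
    return population_stability_index(expected, actual) > DRIFT_THRESHOLD


# Usage: a reference window from training versus two live windows.
training_window = [i / 100 for i in range(100)]
similar_window = [i / 100 + 0.005 for i in range(100)]
shifted_window = [0.9 + i / 1000 for i in range(100)]
print(needs_retraining(training_window, similar_window))  # False
print(needs_retraining(training_window, shifted_window))  # True
```

In a deployed system, a check like this would run per feature on a schedule. A `True` result would feed the retraining trigger rather than retrain unconditionally.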

Design Considerations

Designing a robust and future-proof data architecture for AI/ML requires careful consideration of various design aspects that go beyond simply selecting the right tools and technologies. These considerations must address the dynamic nature of AI/ML workloads, ensuring the architecture can adapt to evolving business requirements and technological advancements.

Data Governance: A cornerstone of any successful data architecture, data governance becomes even more critical in the context of AI/ML. Establishing clear policies for data quality, security, and compliance is crucial. This includes defining data ownership, lineage, and access control to ensure data integrity and regulatory compliance, especially when dealing with sensitive information used in model training.
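Ownership, lineage, and access-control policies are easier to enforce when they are recorded as data. The sketch below models a hypothetical catalog entry and runs two checks against it:

- a role-based read policy for sensitive datasets;
- a recursive lineage lookup.

The `DatasetRecord` fields, role names, and dataset names are invented for illustration. Real deployments would rely on a data catalog or governance service.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    """Hypothetical catalog entry recording ownership, lineage, and access policy."""
    name: str
    owner: str
    contains_pii: bool
    upstream: tuple[str, ...] = ()                # lineage: datasets this one derives from
    approved_roles: frozenset[str] = frozenset()  # roles cleared to read sensitive data


def can_read(record: DatasetRecord, role: str) -> bool:
    # Example policy: non-sensitive data is open; PII requires explicit approval.
    return not record.contains_pii or role in record.approved_roles


def full_lineage(name: str, catalog: dict[str, DatasetRecord]) -> set[str]:
    """Walk the catalog to find every upstream dataset that feeds `name`."""
    seen: set[str] = set()
    stack = list(catalog[name].upstream)
    while stack:
        parent = stack.pop()
        if parent not in seen:
            seen.add(parent)
            stack.extend(catalog[parent].upstream)
    return seen


catalog = {
    "raw_billing": DatasetRecord("raw_billing", "finance", True,
                                 approved_roles=frozenset({"billing_engineer"})),
    "raw_call_records": DatasetRecord("raw_call_records", "network_ops", True,
                                      approved_roles=frozenset({"billing_engineer"})),
    # Assumes identifiers were stripped during feature engineering.
    "churn_features": DatasetRecord("churn_features", "data_science", False,
                                    upstream=("raw_billing", "raw_call_records")),
    "churn_training_set": DatasetRecord("churn_training_set", "data_science", False,
                                        upstream=("churn_features",)),
}
print(sorted(full_lineage("churn_training_set", catalog)))
print(can_read(catalog["raw_billing"], "data_scientist"))  # False
```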

Scalability and Performance: AI/ML workloads often involve processing massive datasets and running computationally intensive tasks. The architecture must be designed to scale horizontally, accommodating growing data volumes and processing demands without performance bottlenecks. This involves leveraging distributed computing frameworks, optimized data storage solutions, and efficient data pipelines to ensure smooth and timely execution of AI/ML tasks.
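Horizontal scaling depends on splitting data so that independent workers can process it in parallel. A common approach is hash partitioning by a business key, which is the idea behind key-based repartitioning in engines such as Spark. The pure-Python sketch below is illustrative only. The `customer_id` field, the sample records, and the partition count are assumptions.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    # A stable digest keeps assignments consistent across processes and runs;
    # Python's built-in hash() is randomized per process for strings.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition(records: list[dict], key_field: str, num_partitions: int) -> dict[int, list[dict]]:
    """Group records so that all rows sharing a key land on the same worker."""
    buckets: dict[int, list[dict]] = defaultdict(list)
    for record in records:
        buckets[partition_for(str(record[key_field]), num_partitions)].append(record)
    return buckets


call_records = [{"customer_id": f"c{i % 50}", "minutes": i % 30} for i in range(1_000)]
buckets = partition(call_records, "customer_id", num_partitions=8)
print({p: len(rows) for p, rows in sorted(buckets.items())})
```

Keeping all of a customer's rows in one partition lets per-customer aggregations run without shuffling data between workers.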

Security: Security is paramount when dealing with sensitive data and valuable AI/ML models. Implementing robust security measures throughout the data lifecycle is essential. This includes data encryption at rest and in transit, access control mechanisms to restrict unauthorized access, and regular security audits to identify and mitigate vulnerabilities.

Flexibility and Agility: The field of AI/ML is constantly evolving, with new algorithms, frameworks, and techniques emerging regularly. The architecture needs to be flexible and agile to adapt to these changes, allowing for easy integration of new technologies and experimentation with different approaches. This requires modular design, well-defined interfaces, and the ability to quickly deploy and test new models and pipelines.
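One way to keep models swappable is to have every model implement a small, well-defined interface, so that serving and evaluation code never depends on a specific framework. The sketch below uses Python's `typing.Protocol` for that contract. The `ChurnScorer` name, its `score` method, the feature layout, and both example models are hypothetical.

```python
from __future__ import annotations

import math
from typing import Protocol, Sequence


class ChurnScorer(Protocol):
    """Hypothetical contract: any model that maps a feature vector to a 0-1 risk."""

    def score(self, features: Sequence[float]) -> float: ...


class RuleBaseline:
    """Simple rule used while no trained model is available."""

    def score(self, features: Sequence[float]) -> float:
        support_calls = features[0]  # hypothetical layout: [support_calls_90d, months_on_contract]
        return 0.8 if support_calls >= 5 else 0.1


class LogisticScorer:
    """Linear model with a logistic link; the weights would come from training."""

    def __init__(self, weights: Sequence[float], bias: float) -> None:
        self.weights = list(weights)
        self.bias = bias

    def score(self, features: Sequence[float]) -> float:
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))


def score_batch(model: ChurnScorer, rows: Sequence[Sequence[float]]) -> list[float]:
    # Downstream code depends only on the interface, so models can be swapped freely.
    return [model.score(row) for row in rows]


rows = [[6.0, 12.0], [0.0, 48.0]]
for model in (RuleBaseline(), LogisticScorer(weights=[0.4, -0.05], bias=-1.0)):
    print(type(model).__name__, [round(s, 3) for s in score_batch(model, rows)])
```

Because `Protocol` uses structural typing, a new model only needs a matching `score` method. No shared base class or registration step is required.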

Furthermore, effectively addressing these design considerations requires a deep understanding of the specific AI/ML use cases within the organization. This involves close collaboration between data architects, data scientists, and business stakeholders to align the architecture with business objectives and ensure it can effectively support the desired AI/ML applications.

Intelligent Data Pipelines

Intelligent data pipelines represent a significant evolution beyond traditional ETL (Extract, Transform, Load) processes, marking a paradigm shift in how data is ingested, processed, and delivered within modern data architectures. These pipelines leverage AI/ML capabilities to not only automate data workflows but also to optimize them dynamically, adapting to changing data characteristics and business requirements.

One of the key differentiators of intelligent data pipelines is their ability to dynamically adjust data processing based on the characteristics of the data itself. This means that the pipeline can automatically select the most appropriate data cleaning techniques, feature engineering methods, or data transformation rules based on the specific data being processed. For instance, if the pipeline detects a high degree of missing values in a particular dataset, it can automatically apply imputation techniques or choose a model that is robust to missing data.
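A rule-based version of this adaptive behaviour is easy to sketch: measure how sparse a column is, then choose a strategy. Real intelligent pipelines may learn these choices rather than hard-code them. The thresholds, strategy names, and `adapt_column` helper below are hypothetical.

```python
from statistics import median

# Hypothetical thresholds; in practice these would be tuned per dataset.
DROP_IF_MISSING_AT_MOST = 0.05
IMPUTE_IF_MISSING_AT_MOST = 0.40


def adapt_column(rows: list[dict], column: str) -> tuple[str, list[dict]]:
    """Pick a missing-value strategy for `column` based on how sparse it is."""
    if not rows:
        return "none", rows
    present = [r[column] for r in rows if r.get(column) is not None]
    missing_ratio = (len(rows) - len(present)) / len(rows)

    if missing_ratio == 0:
        return "none", rows
    if missing_ratio <= DROP_IF_MISSING_AT_MOST:
        # Few gaps: dropping incomplete rows loses little information.
        return "drop_rows", [r for r in rows if r.get(column) is not None]
    if missing_ratio <= IMPUTE_IF_MISSING_AT_MOST:
        # Moderate gaps: fill with the median, which is robust to outliers.
        fill = median(present)
        return "impute_median", [
            r if r.get(column) is not None else {**r, column: fill} for r in rows
        ]
    # Too sparse to impute reliably: leave the data untouched and flag it so a
    # human (or a model that tolerates missing values) can take over.
    return "flag_for_review", rows


customers = [{"id": i, "tenure": None if i % 5 == 0 else i} for i in range(1, 101)]
strategy, cleaned = adapt_column(customers, "tenure")
print(strategy)  # impute_median (20% of values are missing)
```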

Furthermore, intelligent data pipelines can predict and prevent data quality issues before they impact downstream processes. By leveraging machine learning models, these pipelines can identify potential anomalies, inconsistencies, or errors in the data, flagging them for review or automatically applying corrective actions. This proactive approach to data quality management ensures that only reliable and accurate data is used for analysis and decision-making.
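As a simple stand-in for the ML-based checks described above, the sketch below flags outliers with a robust statistical rule: the modified z-score based on the median absolute deviation (MAD). Flagged rows are quarantined before they reach downstream consumers. The 3.5 cutoff is the value commonly recommended for this statistic. The `quarantine_outliers` helper and the sample billing data are hypothetical.

```python
from statistics import median

MODIFIED_Z_CUTOFF = 3.5  # commonly recommended cutoff for the modified z-score


def outlier_indices(values: list[float]) -> list[int]:
    """Return positions whose modified z-score exceeds the cutoff."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; treat any deviation as suspect.
        return [i for i, v in enumerate(values) if v != med]
    return [
        i for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > MODIFIED_Z_CUTOFF
    ]


def quarantine_outliers(rows: list[dict], column: str) -> tuple[list[dict], list[dict]]:
    """Split a batch into rows safe to publish and rows held back for review."""
    flagged = set(outlier_indices([r[column] for r in rows]))
    clean = [r for i, r in enumerate(rows) if i not in flagged]
    held = [r for i, r in enumerate(rows) if i in flagged]
    return clean, held


bills = [{"account": n, "amount": a}
         for n, a in enumerate([100, 102, 98, 101, 99, 103, 97, 5000])]
clean, held = quarantine_outliers(bills, "amount")
print(held)  # [{'account': 7, 'amount': 5000}]
```

The median and MAD are used instead of the mean and standard deviation because a single extreme value barely moves them. This keeps the outlier from masking itself.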

Another crucial aspect of intelligent data pipelines is their ability to optimize data flow and resource allocation in real-time. By continuously monitoring performance metrics and workload demands, these pipelines can dynamically adjust resource allocation, prioritize critical data flows, and optimize processing tasks to ensure efficient utilization of infrastructure and minimize latency.
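A self-optimizing pipeline needs some policy that turns observed metrics into allocation decisions. The sketch below uses a deliberately simple feedback rule in place of a learned policy:

- size each flow's worker pool to drain its backlog within a target time;
- serve the most critical flows first.

All names, priorities, and throughput figures are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class FlowStatus:
    name: str
    priority: int  # lower number = more critical (hypothetical convention)
    backlog_records: int


def desired_workers(backlog_records: int, records_per_worker_per_sec: float,
                    target_drain_seconds: float, min_workers: int = 1,
                    max_workers: int = 64) -> int:
    """Size a worker pool so the current backlog drains within the target time."""
    needed = math.ceil(backlog_records / (records_per_worker_per_sec * target_drain_seconds))
    return max(min_workers, min(max_workers, needed))


def allocate(flows: list[FlowStatus], total_workers: int,
             records_per_worker_per_sec: float, target_drain_seconds: float) -> dict[str, int]:
    """Hand out a fixed worker budget, most critical flows first."""
    allocation: dict[str, int] = {}
    remaining = total_workers
    for flow in sorted(flows, key=lambda f: f.priority):
        want = desired_workers(flow.backlog_records, records_per_worker_per_sec,
                               target_drain_seconds)
        allocation[flow.name] = min(want, remaining)
        remaining -= allocation[flow.name]
    return allocation


flows = [
    FlowStatus("marketing_clickstream", priority=2, backlog_records=300_000),
    FlowStatus("billing_events", priority=1, backlog_records=120_000),
]
print(allocate(flows, total_workers=10, records_per_worker_per_sec=500, target_drain_seconds=60))
# {'billing_events': 4, 'marketing_clickstream': 6}
```

Re-running `allocate` on fresh metrics every few seconds closes the loop. A learned policy could later replace `desired_workers` without changing the caller.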

Key characteristics of intelligent data pipelines:

- Adaptive processing: Dynamically adjust data processing steps based on data characteristics.

- Predictive data quality: Proactively identify and prevent data quality issues.

- Automated metadata management: Automatically capture and manage metadata throughout the data lifecycle.

- Self-optimization: Continuously optimize data flow and resource allocation based on performance metrics.

- Integration with AI/ML platforms: Seamlessly integrate with AI/ML platforms for model training and deployment.
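Automated metadata management is the easiest of these characteristics to illustrate in a few lines. The approach is to wrap each pipeline step so that row counts, output schema, and timing are recorded without touching the step itself. This is a minimal sketch. The `track_metadata` decorator, the in-memory `METADATA_LOG` (a stand-in for a real metadata catalog), and the example steps are hypothetical.

```python
import functools
import time
from typing import Callable

# Hypothetical in-memory stand-in for a metadata catalog or lineage service.
METADATA_LOG: list[dict] = []

Rows = list[dict]


def track_metadata(step: Callable[[Rows], Rows]) -> Callable[[Rows], Rows]:
    """Record what each pipeline step consumed and produced, without modifying the step."""

    @functools.wraps(step)
    def wrapper(rows: Rows) -> Rows:
        started = time.perf_counter()
        result = step(rows)
        METADATA_LOG.append({
            "step": step.__name__,
            "rows_in": len(rows),
            "rows_out": len(result),
            "columns_out": sorted({key for row in result for key in row}),
            "seconds": time.perf_counter() - started,
        })
        return result

    return wrapper


@track_metadata
def drop_inactive(rows: Rows) -> Rows:
    return [r for r in rows if r["active"]]


@track_metadata
def add_tenure_years(rows: Rows) -> Rows:
    return [{**r, "tenure_years": r["tenure_months"] / 12} for r in rows]


customers = [
    {"id": 1, "active": True, "tenure_months": 24},
    {"id": 2, "active": False, "tenure_months": 6},
]
features = add_tenure_years(drop_inactive(customers))
for entry in METADATA_LOG:
    print(entry["step"], entry["rows_in"], "->", entry["rows_out"], entry["columns_out"])
```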

By incorporating these intelligent capabilities, data pipelines become more than just conduits for data movement; they become active participants in the data value chain, ensuring data quality, efficiency, and adaptability in the face of ever-growing data volumes and complexity. This empowers organizations to extract maximum value from their data assets and accelerate their AI/ML initiatives.

Scalable Data Platforms

Building and deploying effective AI/ML solutions requires more than just powerful algorithms and clean data. It demands a robust and scalable data platform capable of handling the unique challenges posed by these data-intensive workloads. This goes beyond simply storing large volumes of data; it's about creating an ecosystem that supports the entire AI/ML lifecycle, from data ingestion and preparation to model training, deployment, and monitoring.

Key characteristics of a scalable data platform for AI/ML:

- Support for diverse data formats and storage technologies: AI/ML workloads often involve diverse data types, including structured, semi-structured, and unstructured data. The platform should accommodate various data formats (CSV, JSON, Parquet, Avro, images, video, etc.) and offer flexibility in choosing appropriate storage technologies (relational databases, NoSQL databases, object storage, data lakes) based on the specific needs of each dataset.

- Efficient data access and retrieval: AI/ML workloads require efficient access to data for both batch processing (e.g., model training) and real-time processing (e.g., online inference). The platform should provide mechanisms for fast data retrieval, including optimized query engines, indexing strategies, and data partitioning techniques.

- Tools for data exploration, analysis, and visualization: Before building models, data scientists need to understand the data. The platform should provide tools for data exploration, profiling, and visualization, enabling data scientists to identify patterns, uncover insights, and prepare data for model training.

- Integration with popular AI/ML frameworks and tools: The platform should seamlessly integrate with popular AI/ML frameworks and tools like TensorFlow, PyTorch, scikit-learn, and Spark MLlib. This allows data scientists to leverage their preferred tools and frameworks without friction, streamlining model development and deployment.

- Scalability and elasticity: AI/ML workloads can be highly demanding in terms of compute and storage resources. The platform should be able to scale horizontally to accommodate growing data volumes and processing needs. Cloud-based platforms offer inherent scalability and elasticity, allowing organizations to dynamically adjust resources based on demand.

- Robust security and governance: Protecting sensitive data and valuable AI/ML models is crucial. The platform should provide robust security features, including access control, encryption, and auditing capabilities. It should also support data governance policies to ensure data quality, compliance, and responsible use of AI/ML.

- Support for data versioning and lineage: Tracking data changes and model lineage is essential for reproducibility and debugging in AI/ML workflows. The platform should provide mechanisms for data versioning, allowing data scientists to track different versions of datasets and understand how data transformations impact model performance.
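The versioning requirement can be illustrated with content fingerprinting. The idea is to hash a dataset snapshot and record that fingerprint alongside each training run, so any model can be traced back to the exact data it saw. Dedicated versioning tools do considerably more, including storage, diffs, and branching. This sketch shows only the core idea, and the function names, registry, and sample data are hypothetical.

```python
import hashlib
import json


def dataset_version(rows: list[dict]) -> str:
    """Content fingerprint: identical rows in the same order yield the same id."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the fingerprint independent of dict key order.
        digest.update(json.dumps(row, sort_keys=True, default=str).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:16]


# Hypothetical run registry linking each trained model to the exact data it saw.
RUN_REGISTRY: dict[str, dict] = {}


def register_training_run(run_id: str, rows: list[dict], params: dict) -> str:
    version = dataset_version(rows)
    RUN_REGISTRY[run_id] = {"data_version": version, "params": params}
    return version


snapshot = [{"customer_id": "c1", "churned": False}, {"customer_id": "c2", "churned": True}]
v1 = register_training_run("run-001", snapshot, {"max_depth": 4})
v2 = register_training_run("run-002", snapshot + [{"customer_id": "c3", "churned": False}],
                           {"max_depth": 4})
print(v1 != v2)  # True: the second run trained on different data
```

Row order affects the fingerprint. If logically identical datasets can arrive in different orders, sort them by a stable key before hashing.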

By incorporating these features, organizations can build a scalable and flexible data platform that empowers their AI/ML initiatives. This platform serves as the foundation for developing, deploying, and managing AI/ML models effectively, enabling data-driven decision-making and innovation across the organization.

Decision Intelligence

Decision Intelligence (DI) represents a significant advancement in leveraging data and AI to enhance human decision-making. It goes beyond simply providing data visualizations or predictive models; DI aims to create a framework where AI augments human intellect, enabling more informed, efficient, and effective decisions across all levels of an organization.

Here's a deeper dive into the core components and complexities of Decision Intelligence:

1. More than just Predictive Analytics: While predictive analytics forms a crucial foundation, DI extends its capabilities by incorporating prescriptive analytics. This means that instead of just forecasting future outcomes, DI systems recommend optimal actions based on data-driven insights and simulations. It considers various scenarios, constraints, and potential outcomes to guide decision-makers towards the best course of action.

2. Closing the Loop with Feedback and Learning: DI systems are designed to learn and adapt over time. They incorporate feedback mechanisms to continuously evaluate the outcomes of decisions, identify areas for improvement, and refine the underlying models and recommendations. This iterative learning process allows the system to become more intelligent and aligned with organizational goals.

3. Explainable AI (XAI) for Transparency and Trust: One of the crucial aspects of DI is the emphasis on explainable AI. This means that the system provides clear explanations for its recommendations, making the decision-making process transparent and building trust among users. XAI helps overcome the "black box" nature of some AI models, allowing users to understand the reasoning behind the suggestions and make informed judgments.

4. Human-centered Design and Collaboration: DI is not about replacing human decision-makers but empowering them with AI-driven insights. DI systems are designed with a human-centered approach, focusing on user experience and collaboration. They provide intuitive interfaces, visualizations, and tools that enable users to interact with data, explore different scenarios, and make informed decisions with confidence.

5. Addressing Complexities and Uncertainties: Real-world decision-making often involves complexities, uncertainties, and trade-offs. DI frameworks address these challenges by incorporating techniques like scenario planning, risk assessment, and optimization algorithms. They help decision-makers navigate complex situations, evaluate potential risks and rewards, and make informed choices even in uncertain environments.
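Points 1, 3, and 5 can be sketched together:

- recommend actions rather than just forecast;
- explain the recommendation;
- weigh risk against reward.

The sketch scores each candidate action under weighted scenarios, respects a budget constraint, and returns the numbers behind the choice. This is a deliberately small expected-value model. The actions, scenario probabilities, and payoffs are invented for illustration, and production DI systems would typically use richer simulation or optimization.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    cost: float
    payoff: dict[str, float]  # hypothetical value of the action under each scenario


def expected_value(action: Action, scenarios: dict[str, float]) -> float:
    return sum(p * action.payoff[s] for s, p in scenarios.items()) - action.cost


def worst_case(action: Action) -> float:
    return min(action.payoff.values()) - action.cost


def recommend(actions: list[Action], scenarios: dict[str, float], budget: float):
    """Pick the affordable action with the highest expected value, and explain why."""
    feasible = [a for a in actions if a.cost <= budget]
    if not feasible:
        return None, {}
    explanation = {
        a.name: {"expected": round(expected_value(a, scenarios), 2),
                 "worst_case": round(worst_case(a), 2)}
        for a in feasible
    }
    best = max(feasible, key=lambda a: expected_value(a, scenarios))
    return best.name, explanation


scenarios = {"pessimistic": 0.3, "expected": 0.5, "optimistic": 0.2}  # probabilities
actions = [
    Action("do_nothing", 0, {"pessimistic": 0, "expected": 0, "optimistic": 0}),
    Action("discount_offer", 20, {"pessimistic": 30, "expected": 50, "optimistic": 80}),
    Action("premium_upgrade", 60, {"pessimistic": 10, "expected": 90, "optimistic": 200}),
]
choice, why = recommend(actions, scenarios, budget=100)
print(choice, why)
```

The explanation shows why the recommendation is not a close call on risk. `premium_upgrade` nearly matches `discount_offer` in expected value (28 vs. 30), but its worst case is -50 against +10. A decision-maker can see that trade-off directly rather than trusting an opaque score.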

Examples of DI in Action:

- Supply Chain Optimization: Predicting demand fluctuations, optimizing inventory levels, and recommending efficient logistics routes.

- Financial Risk Management: Identifying fraudulent transactions, assessing credit risk, and optimizing investment portfolios.

- Healthcare Diagnostics: Assisting doctors in diagnosing diseases, recommending personalized treatment plans, and predicting patient outcomes.

- Customer Relationship Management: Predicting customer churn, recommending personalized offers, and optimizing marketing campaigns.

By integrating data, AI, and human expertise, Decision Intelligence empowers organizations to make faster, more accurate, and more consistent decisions. It fosters a data-driven culture, improves operational efficiency, and drives strategic advantage in today's complex and dynamic business environment.

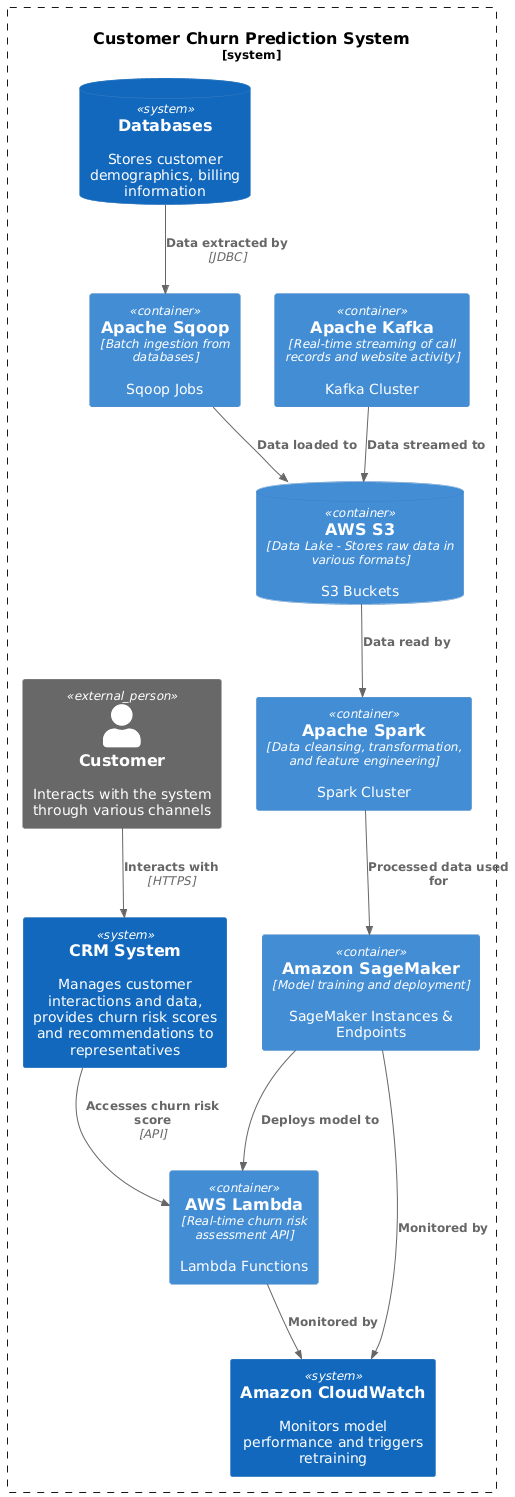

Example Architecture: Customer Churn Prediction

Consider a scenario where a telecommunications company wants to predict customer churn using AI/ML. A possible architecture could include:

Data Sources: Customer demographics, billing information, call records, and web browsing history from various databases and CRM systems.

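Data Ingestion: Apache Kafka for real-time streaming of call records and website activity, combined with batch ingestion from databases using Apache Sqoop. (Sqoop was retired to the Apache Attic in 2021, so a new build might use Spark's JDBC reader or AWS Database Migration Service for the batch path instead.)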

Data Lake: AWS S3 for storing raw data in various formats.

Data Processing: Apache Spark for data cleansing, transformation, and feature engineering.

Model Training: Amazon SageMaker for training a churn prediction model using a gradient boosting algorithm.

Model Deployment: Serve the trained model through a real-time API built on AWS Lambda for immediate churn risk assessment upon customer interaction. The function can either package the model itself or invoke a SageMaker endpoint.

Model Monitoring: Amazon CloudWatch for monitoring model performance and triggering retraining when necessary.

Decision Intelligence: Integrate the churn prediction API with a customer relationship management (CRM) system to provide customer service representatives with real-time churn risk scores and recommended actions to retain at-risk customers.
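The real-time scoring step can be sketched as an AWS Lambda handler behind an API Gateway proxy integration, which delivers the request body as a JSON string in `event["body"]`. To keep the sketch self-contained, a hand-written logistic score stands in for the gradient boosting model trained in SageMaker. Its feature names, weights, and action thresholds are hypothetical. A real function would load the trained artifact or call a hosted endpoint instead.

```python
import json
import math

# Hypothetical coefficients standing in for the trained gradient boosting model.
WEIGHTS = {"months_on_contract": -0.05, "support_calls_90d": 0.4, "monthly_bill_change_pct": 0.03}
BIAS = -1.0


def churn_probability(features: dict) -> float:
    # Missing features default to 0.0 so partial payloads still score.
    z = BIAS + sum(w * float(features.get(name, 0.0)) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def recommended_action(probability: float) -> str:
    # Hypothetical playbook thresholds agreed with the retention team.
    if probability >= 0.7:
        return "escalate_to_retention_specialist"
    if probability >= 0.4:
        return "offer_loyalty_discount"
    return "no_action"


def lambda_handler(event, context):
    """Entry point for an API Gateway proxy request carrying customer features."""
    features = json.loads(event["body"])
    probability = churn_probability(features)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({
            "churn_risk": round(probability, 3),
            "recommended_action": recommended_action(probability),
        }),
    }


# Local smoke test with a made-up request.
request = {"body": json.dumps({"months_on_contract": 2, "support_calls_90d": 6,
                               "monthly_bill_change_pct": 20})}
print(lambda_handler(request, None)["body"])
```

Returning both the risk score and a suggested action keeps the CRM integration simple. The service representative sees a recommendation, not just a probability.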

Component Choice Rationale:

Kafka: Chosen for its ability to handle high-volume, real-time data streams.

S3: Selected for its scalability, durability, and cost-effectiveness.

Spark: Utilized for its distributed processing capabilities and rich ecosystem for data manipulation.

SageMaker: Leveraged for its comprehensive suite of tools for model training, deployment, and monitoring.

Lambda: Chosen for its serverless architecture and ability to scale automatically based on demand.

CloudWatch: Integrated for its comprehensive monitoring capabilities and integration with other AWS services.

Final Thoughts

Integrating AI/ML into data architectures is no longer a luxury but a necessity for organizations seeking to extract maximum value from their data. By carefully considering the key components, design considerations, and emerging trends like intelligent data pipelines and decision intelligence, data architects can build robust, scalable, and future-proof solutions that empower data-driven decision-making and drive business innovation.

This journey requires a shift in mindset, embracing agility, experimentation, and continuous learning. As AI/ML technologies continue to evolve at a rapid pace, data architects must stay abreast of the latest advancements and adapt their strategies accordingly. The rewards, however, are significant: unlocking the true potential of data to transform businesses and shape the future.