The rise of serverless computing has revolutionized how we design, deploy, and manage data architectures. This article delves into the core concepts of serverless data architectures, exploring their key components, benefits, and challenges. We'll examine real-world use cases and provide practical insights into building robust and scalable data solutions using serverless technologies. This exploration is geared towards experienced data professionals, including data engineers, data scientists, and data architects, seeking to leverage the agility and efficiency of serverless computing in their data-driven initiatives.

Introduction: The Serverless Paradigm Shift

Traditional data architectures often rely on dedicated infrastructure, requiring significant upfront investment and ongoing maintenance. Serverless architectures, however, offer a compelling alternative by abstracting away the underlying infrastructure management. This allows data professionals to focus on core data tasks – processing, analysis, and visualization – rather than server provisioning and configuration.

The core principle of serverless is the execution of code in stateless compute containers, triggered by events. This event-driven architecture enables dynamic scaling, adapting to fluctuating workloads and optimizing resource utilization. By eliminating the need for server management, serverless architectures offer compelling advantages in terms of cost-efficiency, scalability, and developer productivity.

Key Components of a Serverless Data Architecture

A typical serverless data architecture comprises several key components:

Data Sources: These can range from traditional databases to streaming platforms and cloud storage services. Examples include:

- Relational databases like Amazon Aurora Serverless

- NoSQL databases like DynamoDB

- Data streams like Amazon Kinesis

- Cloud storage like Amazon S3

Data Ingestion: Services like AWS Lambda can be triggered by events in data sources to ingest and transform data. This allows for real-time data processing and loading into data stores.

Data Processing: Serverless compute services, such as AWS Lambda or Google Cloud Functions, provide the engine for data transformation, enrichment, and analysis. These services can be orchestrated using workflow tools like AWS Step Functions.

Data Storage: Serverless databases, data warehouses, and data lakes offer scalable and cost-effective storage solutions. Services like Amazon Redshift Serverless, Google BigQuery, and Amazon S3 cover different storage needs, from interactive analytical queries to low-cost object storage for a data lake.

Data Analytics and Visualization: Serverless architectures facilitate data analysis and visualization through tools like Amazon Athena, which enables querying data in S3 using SQL, and integration with visualization platforms like Tableau or Power BI.
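To make the ingestion component above more concrete, here is a minimal sketch of a Lambda-style handler that reacts to an S3 event notification. It assumes the standard notification layout (a `Records` list with `s3.bucket.name` and `s3.object.key`); the function names and the returned summary are illustrative choices, not part of any prescribed design.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3", {})
        bucket = s3_info.get("bucket", {}).get("name")
        key = s3_info.get("object", {}).get("key")
        if bucket and key:
            # Object keys arrive URL-encoded in notifications (spaces as '+').
            objects.append((bucket, unquote_plus(key)))
    return objects


def handler(event, context):
    """Entry point: list the objects that triggered this invocation."""
    objects = extract_s3_objects(event)
    # A real function would read each object and load it into a data store here.
    return {"ingested": [f"s3://{bucket}/{key}" for bucket, key in objects]}
```

Keeping the event parsing in its own function makes it easy to unit test with a hand-built event, without deploying anything.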

Benefits of Serverless Data Architectures

Serverless architectures offer data-driven organizations a range of benefits. These go beyond cost savings to agility, scalability, and operational efficiency.

1. Cost Optimization

Pay-as-you-go Pricing: One of the most significant advantages of serverless is the pay-as-you-go pricing model. This eliminates the need for upfront investments in infrastructure and allows organizations to pay only for the actual compute time consumed. This granular pricing model aligns costs directly with usage, optimizing spending and avoiding unnecessary expenses, particularly for applications with variable workloads or unpredictable demand patterns.

Reduced Operational Costs: By offloading infrastructure management to the cloud provider, organizations can significantly reduce operational costs associated with server maintenance, patching, and upgrades. This frees up valuable resources and allows IT teams to focus on strategic initiatives rather than routine maintenance tasks.
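The pay-as-you-go model is easier to reason about with a little arithmetic. The sketch below assumes a billing scheme based on GB-seconds of compute plus a per-request fee, which is how AWS Lambda charges; the function name and the rates used in the usage note are placeholders, not current list prices.

```python
def estimate_monthly_cost(invocations, avg_duration_ms, memory_mb,
                          price_per_gb_second, price_per_million_requests):
    """Estimate monthly spend for one function under pay-per-use pricing."""
    # Compute is billed in GB-seconds: memory (GB) x execution time (s).
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = (invocations / 1_000_000) * price_per_million_requests
    return round(compute_cost + request_cost, 2)
```

For example, with placeholder rates of 0.00002 per GB-second and 0.20 per million requests, one million invocations of 200 ms each at 512 MB come to 100,000 GB-seconds, or about 2.20 in total. An idle month costs nothing, which is the core of the pricing argument.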

2. Enhanced Scalability and Elasticity

Automatic Scaling: Serverless platforms automatically scale resources up or down in response to real-time demand. This keeps performance steady during peak loads, within the concurrency quotas the provider sets, and avoids paying for idle capacity when traffic drops. This elasticity is particularly valuable for applications that experience unpredictable traffic spikes or seasonal variations.

Fine-grained Resource Allocation: Serverless architectures enable fine-grained control over resource allocation, allowing organizations to tailor computing resources to the specific needs of each function or workload. This granular control optimizes resource utilization and avoids over-provisioning, further contributing to cost efficiency.

3. Accelerated Development and Deployment

Increased Agility: Serverless architectures promote agility by streamlining development and deployment processes. With no servers to manage, developers can focus on writing code and deploying applications faster. This accelerated development lifecycle enables quicker iterations, faster time-to-market, and a more responsive approach to changing business requirements.

Simplified Operations: The serverless paradigm simplifies operational complexities by abstracting away infrastructure management. This allows developers to focus on building and deploying applications without worrying about server provisioning, configuration, or scaling. This reduced operational overhead translates to increased developer productivity and faster innovation cycles.

4. Enhanced Focus on Core Business Logic

Empowering Data Professionals: Serverless architectures empower data professionals, such as data scientists and data engineers, to dedicate more time to their core competencies – analyzing data, building models, and extracting valuable insights. By eliminating the burden of infrastructure management, serverless frees up these professionals to focus on higher-value tasks that drive business outcomes.

Accelerated Innovation: By removing the constraints of traditional infrastructure, serverless fosters a culture of innovation. Data teams can experiment with new ideas, explore different approaches, and rapidly prototype solutions without being hindered by infrastructure limitations. This accelerated innovation cycle can lead to the development of new products, services, and data-driven strategies.

5. Improved Application Resilience

Fault Tolerance: Managed serverless services typically run workloads across multiple availability zones within a region, so the failure of a single zone has limited impact on availability. Resilience across regions is not automatic and usually requires deploying to more than one region and routing traffic between them.

Disaster Recovery: Many serverless services include features that simplify disaster recovery, such as managed backups, point-in-time recovery, and cross-region replication for data stores. These features usually have to be enabled and tested, but they shorten the path to restoring applications and data after an outage and help maintain business continuity.

Challenges and Considerations in Serverless Data Architectures

While serverless data architectures offer a compelling vision for the future of data management, it's crucial to approach them with a realistic understanding of the challenges and considerations they present. These challenges, while not insurmountable, require careful planning, diligent execution, and a deep understanding of the serverless paradigm.

Vendor Lock-in

Proprietary Services: One of the most significant concerns with serverless architectures is the potential for vendor lock-in. Cloud providers offer a diverse range of serverless services, many of which are proprietary and not easily portable to other platforms. This can create a dependency on a specific vendor, making it challenging and costly to migrate to another cloud provider in the future.

Mitigation Strategies: To mitigate vendor lock-in, organizations should carefully evaluate their long-term cloud strategy and consider adopting open-source tools and frameworks wherever possible. Designing applications with well-defined interfaces and abstracting away vendor-specific dependencies can also help reduce the risk of lock-in.

Cold Starts and Latency

Function Initialization: Serverless functions, by their nature, are stateless and ephemeral. This means that they are initialized on demand when triggered by an event. This initialization process, known as a "cold start," can introduce latency, especially for functions that haven't been invoked recently. This latency can be detrimental to applications that require real-time responsiveness.

Optimization Techniques: Several techniques can be employed to minimize cold start latency, including optimizing function code, reducing dependencies, using provisioned concurrency, and keeping functions "warm" through periodic invocations. However, it's essential to be aware of this potential latency and design applications accordingly.
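One common way to soften cold starts is to do expensive setup once per execution environment and reuse it on later invocations. The sketch below illustrates that pattern in plain Python; `load_model`, the `CACHE` dictionary, and the simulated delay are all hypothetical stand-ins for real initialization work such as loading a model or opening connections.

```python
import time

# Module-level state survives between invocations while the environment stays warm.
CACHE = {"model": None, "loads": 0}


def load_model():
    """Stand-in for slow setup such as loading a model or opening connections."""
    CACHE["loads"] += 1
    time.sleep(0.05)  # simulate expensive initialization
    return {"threshold": 0.5}


def get_model():
    """Initialize on first use, then return the cached object."""
    if CACHE["model"] is None:
        CACHE["model"] = load_model()
    return CACHE["model"]


def handler(event, context):
    """Only the first invocation in an environment pays the setup cost."""
    model = get_model()
    return {"flagged": event.get("score", 0.0) > model["threshold"]}
```

This does not remove the first cold start, but it keeps warm invocations fast and pairs well with provisioned concurrency or periodic warm-up calls.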

Debugging and Monitoring Complexities

Distributed Tracing: Debugging and monitoring serverless applications can be more complex compared to traditional monolithic applications. The distributed nature of serverless architectures, with functions interacting across various services, can make it challenging to trace execution flows and identify the root cause of issues.

Observability Tools: Cloud providers offer observability tools and services to help monitor serverless applications, but effectively utilizing these tools requires a deep understanding of the serverless environment and the ability to correlate logs, metrics, and traces across different services. Implementing robust logging and tracing strategies is crucial for effective debugging and monitoring.

Security Considerations

Shared Responsibility Model: Security in serverless environments follows a shared responsibility model. While cloud providers are responsible for securing the underlying infrastructure, organizations are responsible for securing their applications and data. This requires a clear understanding of security best practices and the implementation of appropriate security controls.

Security Best Practices: Key security considerations include access control, data encryption, secure configuration of serverless functions, and vulnerability management. Organizations should adopt a security-first approach and integrate security considerations throughout the development lifecycle.

State Management

Stateless Nature: Serverless functions are inherently stateless, meaning they don't retain information between invocations. This statelessness can pose challenges for applications that require stateful operations or data persistence.

External State Management: To manage state in serverless applications, developers often rely on external services like databases, caches, or message queues. Choosing the appropriate state management solution and integrating it effectively with serverless functions is crucial for building robust and scalable applications.

Operational Challenges

Function Limits: Serverless platforms impose limits on function execution time, memory allocation, and deployment package size. These limits can constrain application design and require careful optimization to ensure efficient operation within these constraints.

Vendor-specific Tools: Managing and operating serverless applications often involves using vendor-specific tools and services. This can create a learning curve for developers and operational teams and require adaptation to different tools and workflows across different cloud providers.

In summary, serverless data architectures offer significant advantages, but the challenges above need to be addressed deliberately. By planning for them and adopting appropriate mitigation strategies, organizations can use serverless to build robust, scalable, and secure data solutions that meet their evolving business needs.

Use Cases and Examples

Serverless architectures are well-suited for a variety of data-intensive applications:

- Real-time Data Analytics: Processing and analyzing streaming data from IoT devices, social media feeds, or financial markets.

- ETL Pipelines: Building efficient and scalable extract, transform, and load (ETL) pipelines for data integration and warehousing.

- Machine Learning: Deploying machine learning models for inference on serverless compute platforms, and running lightweight training or preprocessing jobs that fit within function limits.

- Data APIs: Creating serverless APIs for data access and retrieval.

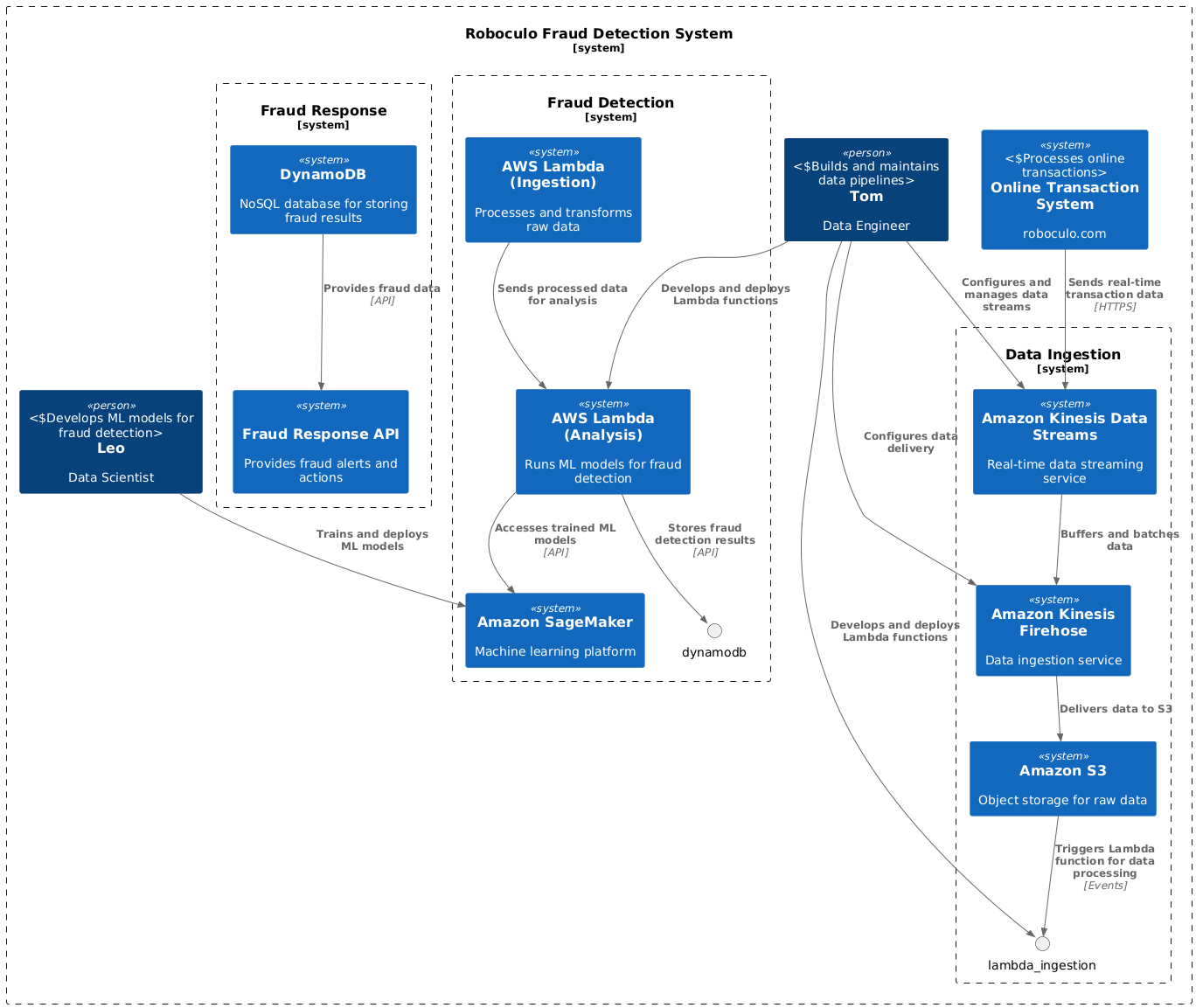

Example: Consider a real-time fraud detection system. Data streams from online transactions can be ingested using Kinesis, processed and analyzed by Lambda functions running machine learning models, and results stored in DynamoDB for immediate action. This serverless architecture enables scalability, low latency, and cost-effective processing of high-volume transaction data.
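The processing step of this fraud detection example might look roughly like the sketch below. It assumes the usual shape of a Kinesis-triggered Lambda event, where each record carries a base64-encoded payload under `kinesis.data`. The transaction fields (`id`, `amount`) and the fixed threshold are hypothetical; the threshold rule simply stands in for a trained model.

```python
import base64
import json

AMOUNT_THRESHOLD = 10_000  # hypothetical rule standing in for a trained model


def decode_records(event):
    """Decode the base64-encoded JSON payloads in a Kinesis-triggered event."""
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        yield json.loads(payload)


def is_suspicious(txn):
    """Placeholder scoring logic; a real system would call a model here."""
    return txn.get("amount", 0) > AMOUNT_THRESHOLD


def handler(event, context):
    """Return the IDs of transactions in this batch that look suspicious."""
    flagged = [txn["id"] for txn in decode_records(event) if is_suspicious(txn)]
    # A real function would write flagged IDs to a store such as DynamoDB.
    return {"flagged": flagged}
```

Separating decoding from scoring means the placeholder rule can later be swapped for a model call without touching the event handling.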

Best Practices for Serverless Data Architectures

To truly harness the power and potential of serverless computing for data-intensive applications, adhering to best practices is essential. These best practices ensure efficiency, scalability, maintainability, and security, enabling you to build robust and future-proof data solutions.

1. Design for Microservices

Decomposition: Break down your application into smaller, independent functions, each responsible for a specific task. This promotes modularity, making it easier to develop, test, deploy, and maintain individual components.

Loose Coupling: Minimize dependencies between functions. This allows for independent scaling and reduces the impact of failures in one function on others.

API-driven Communication: Use well-defined APIs for communication between functions. This promotes interoperability and allows for flexibility in choosing the underlying implementation of each function.

Example: In an ETL pipeline, you could have separate functions for data extraction, transformation, and loading. Each function can be developed and deployed independently, allowing for easier updates and scaling based on the specific needs of each stage.
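As a rough illustration of that decomposition, the sketch below splits a small CSV pipeline into three independent functions. The column names (`customer`, `amount`) and the in-memory list used as a sink are assumptions made for the example; in a deployed pipeline each stage would be its own function, with a warehouse or object store in place of the list.

```python
import csv
import io


def extract(raw_csv):
    """Extract: parse CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows):
    """Transform: normalize names and parse amounts, dropping malformed rows."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append({
                "customer": row["customer"].strip().lower(),
                "amount": float(row["amount"]),
            })
        except (KeyError, ValueError, TypeError, AttributeError):
            continue  # skip rows with missing or non-numeric fields
    return cleaned


def load(rows, sink):
    """Load: append rows to a sink; the list stands in for a warehouse table."""
    sink.extend(rows)
    return len(rows)
```

Because each stage only depends on the shape of its input, any one of them can be rewritten, scaled, or redeployed without changing the others.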

2. Optimize Function Performance

Minimize Execution Time: Reduce the amount of work performed within each function to minimize execution time. This directly impacts cost and performance.

Reduce Dependencies: Minimize the number of external libraries and dependencies used within functions. This reduces the size of the deployment package and improves cold start times.

Right-size Memory Allocation: Choose the appropriate memory allocation for your functions. Over-provisioning memory can lead to unnecessary costs, while under-provisioning can impact performance.

Example: If a function is performing a simple data transformation, ensure the code is optimized and avoid unnecessary computations. Use built-in libraries where possible and choose the appropriate memory allocation based on the function's requirements.
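Right-sizing can be approached empirically: measure average duration at a few memory settings and compare the resulting compute cost. The sketch below assumes billing proportional to memory multiplied by duration (as on AWS Lambda, where more memory also brings more CPU); the function name and the sample measurements are hypothetical.

```python
def cheapest_memory_setting(measurements, max_duration_ms=None):
    """Pick the memory size with the lowest compute cost per invocation.

    measurements maps memory size in MB to measured average duration in ms.
    Cost is proportional to memory x duration, so the unit price cancels out.
    """
    candidates = {
        memory_mb: (memory_mb / 1024) * (duration_ms / 1000)  # GB-seconds
        for memory_mb, duration_ms in measurements.items()
        if max_duration_ms is None or duration_ms <= max_duration_ms
    }
    if not candidates:
        raise ValueError("no memory setting meets the duration limit")
    return min(candidates, key=candidates.get)
```

With sample measurements of `{128: 2400, 512: 500, 1024: 300}`, the 512 MB setting is cheapest per invocation; adding a 400 ms latency limit shifts the choice to 1024 MB. The smallest allocation is not always the cheapest one.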

3. Implement Robust Monitoring and Logging

Centralized Logging: Use a centralized logging service to collect and analyze logs from all your serverless functions. This provides a holistic view of your application's behavior and facilitates troubleshooting.

Structured Logging: Use structured logging formats (e.g., JSON) to make it easier to query and analyze logs.

Distributed Tracing: Implement distributed tracing to track requests as they flow through your serverless architecture. This helps identify performance bottlenecks and understand the interactions between different functions.

Example: Integrate your Lambda functions with a service like Amazon CloudWatch Logs. Configure your functions to emit structured logs containing relevant information like timestamps, request IDs, and error messages. Use AWS X-Ray to trace requests across multiple functions and services.
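A minimal sketch of structured logging might look like the following. It writes one JSON object per line to standard output, which Lambda forwards to CloudWatch Logs, and reads the request ID from the `aws_request_id` attribute of the Lambda context object. The field names and the `rows` input are illustrative assumptions.

```python
import json
import time


def format_log(level, message, request_id, **fields):
    """Build one JSON log line with a consistent set of top-level keys."""
    entry = {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime()),
        "level": level,
        "message": message,
        "request_id": request_id,
    }
    entry.update(fields)
    return json.dumps(entry, default=str)


def handler(event, context):
    """Process a batch and log the outcome as structured JSON."""
    # The Lambda context exposes aws_request_id; fall back when run locally.
    request_id = getattr(context, "aws_request_id", "unknown")
    try:
        rows = len(event["rows"])
        print(format_log("INFO", "batch processed", request_id, rows=rows))
        return {"rows": rows}
    except KeyError as exc:
        print(format_log("ERROR", "missing field", request_id, error=str(exc)))
        raise
```

Including the request ID in every line is what makes it possible to correlate log entries from a single invocation when querying the centralized logs.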

4. Secure Your Data

Principle of Least Privilege: Grant functions only the necessary permissions to access resources. Avoid granting excessive permissions that could be exploited in case of a security breach.

Encryption: Encrypt sensitive data at rest and in transit. Use services like AWS KMS to manage encryption keys.

Secure Configuration: Securely store and manage API keys, secrets, and other sensitive configuration data. Use services like AWS Secrets Manager to store and retrieve secrets securely.

API Gateway Authorization: Use API Gateway to authorize access to your serverless functions. Implement authentication and authorization mechanisms to control access to your data and APIs.

Example: If a Lambda function needs to access data in an S3 bucket, grant it read-only access to that specific bucket instead of granting broader permissions. Encrypt data stored in S3 and use IAM roles to manage access to your Lambda functions.
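The read-only permission described here can be expressed as a small IAM policy document. The sketch below builds one in Python so the bucket name can be parameterized; the helper name, bucket name, and prefix are hypothetical, and the policy covers only `s3:GetObject`, so listing the bucket would need an additional statement.

```python
import json


def read_only_bucket_policy(bucket_name, prefix=""):
    """Build an IAM policy document allowing object reads from one bucket only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                # Scope access to objects in this bucket (optionally under a prefix).
                "Resource": f"arn:aws:s3:::{bucket_name}/{prefix}*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(read_only_bucket_policy("analytics-raw", "events/"), indent=2))
```

Attaching a policy like this to the function's execution role, rather than a broad managed policy, limits what a compromised function can reach.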

5. Manage State Effectively

Externalized State: Store application state in external services like databases (e.g., DynamoDB), caches (e.g., Redis), or message queues (e.g., SQS). This ensures data persistence and allows functions to access stateful information.

Idempotent Operations: Design functions to be idempotent, meaning they can be executed multiple times without unintended side effects. This is crucial for handling retries and ensuring data consistency.

Example: If a function needs to track the progress of a data processing job, store the job status in a DynamoDB table. Design the function to handle potential retries by checking the job status before performing any actions.
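The job-status check can be sketched as follows. `JobStore` is an in-memory stand-in for a status table; with DynamoDB, the `claim` step would typically be a conditional write so that two concurrent invocations cannot both succeed. All class, method, and status names here are illustrative.

```python
class JobStore:
    """In-memory stand-in for a job status table such as one in DynamoDB."""

    def __init__(self):
        self._status = {}

    def claim(self, job_id):
        """Mark a job as started; return False if it was already claimed."""
        if job_id in self._status:
            return False
        self._status[job_id] = "IN_PROGRESS"
        return True

    def complete(self, job_id):
        """Record that the job finished successfully."""
        self._status[job_id] = "DONE"

    def status(self, job_id):
        """Return the recorded status, or None for an unknown job."""
        return self._status.get(job_id)


def process_job(job_id, store, side_effects):
    """Run the job once; retries with the same job_id become no-ops."""
    if not store.claim(job_id):
        return "skipped"
    side_effects.append(job_id)  # stands in for the real work
    store.complete(job_id)
    return "processed"
```

This sketch treats any claimed job as handled, so a job that fails midway stays `IN_PROGRESS`; a production version would also need a timeout or a recovery path for such jobs.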

Final Thoughts

Serverless data architectures represent a significant evolution in data management. By embracing the serverless paradigm, organizations can unlock new levels of agility, scalability, and cost-efficiency in their data initiatives. While challenges exist, careful planning, adherence to best practices, and a deep understanding of serverless technologies can pave the way for building robust and future-proof data solutions. As data continues to grow in volume and complexity, serverless architectures will undoubtedly play an increasingly critical role in shaping the future of data engineering and data science.