The data lake concept has evolved significantly. This article provides a technical deep dive into data lakes, exploring their architecture, benefits, and challenges. We'll discuss data management, compare data lakes with traditional databases, and debunk common misconceptions. This is essential reading for experienced data engineers, data scientists, and data architects looking to leverage the power of this data organization approach.

I hear more and more from people who want to use, or are already using, a data lake, but not all of them understand its fundamental concepts or have a clear justification for adopting one. I wrote this article to bring the data lake concept closer to the reader.

Introduction to the Data Lake

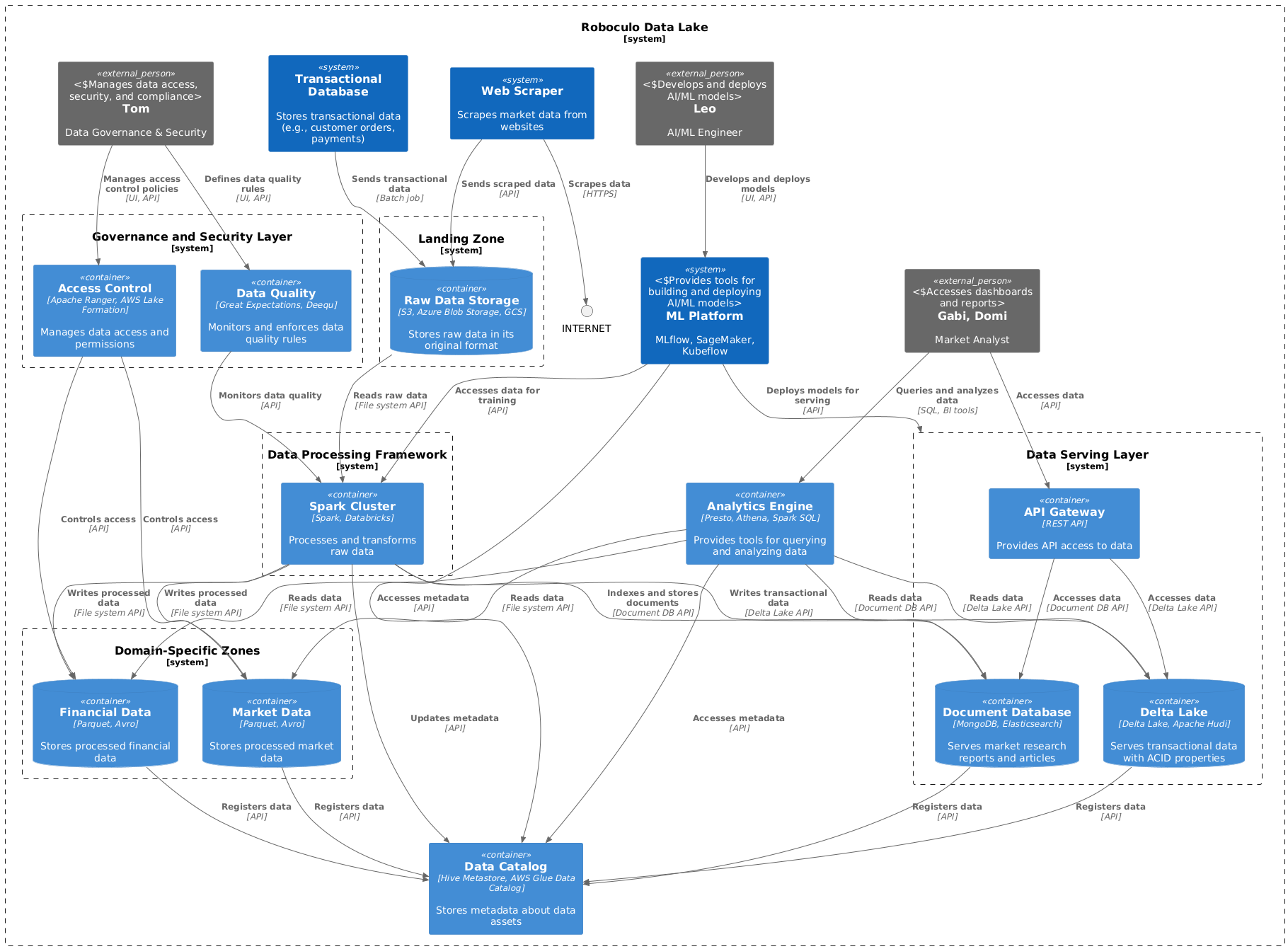

The traditional data lake, while powerful, often suffers from a lack of structure and organization. This can lead to the "data swamp" scenario, where finding and utilizing relevant data becomes a significant challenge. Modern data lakes address these issues by employing techniques like data cataloging, metadata management, and domain-specific zones for better organization.

Think of a large organization with departments like marketing, sales, and finance. Each department generates and consumes data relevant to its specific functions. A well-structured data lake would have separate, dedicated areas for each of these domains, allowing for better data governance, security, and discoverability.
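One way to make those dedicated areas concrete is a path convention in the lake's storage. Each dataset sits under a zone and a domain prefix, so access can be granted per domain. The sketch below assumes a hypothetical layout (`landing/<source>/...` for raw data, `domains/<domain>/<dataset>/...` for curated data) and a hypothetical `GRANTS` table. In practice, the storage platform's own policies or a governance tool would enforce these rules.

```python
from datetime import date

# Hypothetical layout convention: raw data lands per source system,
# curated data lives under a per-domain prefix.
LANDING_ROOT = "landing"
DOMAINS_ROOT = "domains"
KNOWN_DOMAINS = {"marketing", "sales", "finance"}

# Hypothetical grants: which teams may read which domain prefixes.
GRANTS = {
    "marketing-analysts": {"marketing"},
    "finance-team": {"finance", "sales"},
}


def landing_path(source: str, ingest_date: date) -> str:
    """Where raw files from a source system land, partitioned by date."""
    return f"{LANDING_ROOT}/{source}/dt={ingest_date.isoformat()}/"


def domain_path(domain: str, dataset: str, ingest_date: date) -> str:
    """Where curated data for a business domain is stored."""
    if domain not in KNOWN_DOMAINS:
        raise ValueError(f"unknown domain: {domain}")
    return f"{DOMAINS_ROOT}/{domain}/{dataset}/dt={ingest_date.isoformat()}/"


def can_read(team: str, path: str) -> bool:
    """Allow reads only under the domain prefixes granted to a team."""
    parts = path.split("/")
    if len(parts) < 2 or parts[0] != DOMAINS_ROOT:
        return False  # landing data stays restricted to pipeline jobs
    return parts[1] in GRANTS.get(team, set())


if __name__ == "__main__":
    p = domain_path("sales", "orders", date(2024, 5, 1))
    print(p)                                  # domains/sales/orders/dt=2024-05-01/
    print(can_read("finance-team", p))        # True
    print(can_read("marketing-analysts", p))  # False
```

Because the domain is part of every path, discoverability (browse by domain) and access control (grant by prefix) both follow from the same convention.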

Key Components of a Data Lake

A robust and effective data lake architecture relies on the seamless integration of several key components. These components work together to ensure data is ingested, stored, processed, and accessed efficiently and securely.

Let's delve deeper into each of them:

- Landing Zone: This serves as the entry point for all raw data entering the data lake. Think of it as a staging area where data from diverse sources, such as databases, CRM systems, social media feeds, and IoT devices, lands in its original format. This raw data is often unstructured or semi-structured and may contain inconsistencies or errors. The landing zone allows for the initial capture of data without immediate transformation, preserving its original state for future analysis or auditing.

- Domain-Specific Zones: To avoid creating a chaotic "data swamp," data lakes employ domain-specific zones. These are dedicated areas within the lake designed to house curated and processed data related to particular business domains, such as marketing, sales, finance, or customer service. By organizing data in this way, data lakes promote better data governance, improve data discoverability, and simplify access control. Data within these zones is typically structured and transformed into formats suitable for analysis and reporting.

- Data Catalog: Imagine a library catalog that not only lists books but also provides detailed information about their content, authors, and publication dates. A data catalog in a data lake serves a similar purpose. It acts as a central repository of metadata, providing crucial information about the data assets within the lake. This includes details about the data's origin, schema, data types, quality, and ownership. A well-maintained data catalog is essential for data discovery, enabling users to easily find and understand the data they need. It also plays a vital role in data governance and compliance efforts.

- Data Processing Framework: This component encompasses the tools and technologies responsible for the heavy lifting of data ingestion, transformation, cleaning, and processing. Popular frameworks like Apache Spark, Apache Flink, and cloud-based data processing services (e.g., AWS Glue, Azure Data Factory) provide the necessary capabilities for handling large volumes of data and performing complex transformations. These frameworks enable data engineers to build efficient data pipelines that move data from the landing zone to the domain-specific zones, ensuring data is refined and prepared for analysis.

- Governance and Security Layer: Protecting data integrity, ensuring compliance with regulations, and controlling data access are critical in any data environment. The governance and security layer in a data lake provides the necessary mechanisms to achieve these goals. This layer includes tools and policies for data quality management, access control, encryption, and auditing. It ensures that data is used responsibly and ethically while protecting it from unauthorized access and potential breaches. Tools like Apache Ranger and AWS Lake Formation are commonly used to implement fine-grained access control and data masking policies.
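To see how these components fit together, here is a minimal sketch of a single pipeline step. Raw JSON lines are stored untouched in the landing zone. A processing step then cleans and types them, writes them to a domain-specific zone, and registers the result in a catalog with its origin, schema, owner, and a basic quality metric. The sketch uses only the Python standard library, with a local directory standing in for object storage. The folder names, the `catalog` dictionary, and the cleaning rules are illustrative assumptions, not the API of Spark, Glue, or any real catalog.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

# Hypothetical stand-in for a real metadata catalog.
catalog: dict[str, dict] = {}


def land_raw(lake: Path, source: str, lines: list[str]) -> Path:
    """Landing zone: store the payload exactly as received."""
    path = lake / "landing" / source / "batch-0001.jsonl"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path


def promote_to_domain(lake: Path, raw: Path, domain: str, dataset: str, owner: str) -> Path:
    """Processing step: parse, clean, and type rows, then write them to the domain zone."""
    clean, rejected = [], 0
    for line in raw.read_text(encoding="utf-8").splitlines():
        try:
            rec = json.loads(line)
            clean.append({"order_id": str(rec["order_id"]), "amount": float(rec["amount"])})
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            rejected += 1  # malformed rows remain available in landing for auditing
    out = lake / "domains" / domain / dataset / "part-0001.jsonl"
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text("".join(json.dumps(r) + "\n" for r in clean), encoding="utf-8")
    # Catalog entry: origin, schema, ownership, and a simple quality metric.
    catalog[f"{domain}.{dataset}"] = {
        "location": str(out),
        "origin": str(raw),
        "schema": {"order_id": "string", "amount": "double"},
        "owner": owner,
        "rows": len(clean),
        "rejected_rows": rejected,
        "updated_at": datetime.now(timezone.utc).isoformat(),
    }
    return out


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        lake = Path(tmp)
        raw = land_raw(lake, "shop-db", ['{"order_id": 1, "amount": "19.99"}',
                                         "not json", '{"order_id": 2}'])
        promote_to_domain(lake, raw, "sales", "orders", owner="sales-data-team")
        entry = catalog["sales.orders"]
        print(entry["rows"], entry["rejected_rows"])  # 1 2
```

In a real lake, the processing step would run in a framework such as Spark or Glue, and the catalog would be a shared service. The flow stays the same: raw data in, curated data out, and metadata recorded at every hop.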

Data Management in a Data Lake

Here are the key concepts of data management in a data lake:

Immutability and Append-Only: Data in a data lake is often stored in an immutable, append-only fashion. Existing files are not modified in place, and changes are written as new files instead. This preserves the full history of the data, which supports lineage tracking and facilitates auditing.

Deleting Data:

- Soft Deletes: Instead of physically deleting data, it's often marked as deleted through metadata or versioning.

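- Hard Deletes: Physical deletion requires locating and removing (or rewriting) every file that contains the affected records, which can be complex at scale. Compliance regulations, such as the GDPR right to erasure, often require this kind of deletion. Table formats like Delta Lake and Apache Hudi make it easier to carry out, while governance tools like Apache Ranger or AWS Lake Formation control who is allowed to delete data.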
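The difference between the two approaches can be shown on a toy table stored as a list of immutable "files". A soft delete only records the key in a tombstone set that readers filter on. A hard delete rewrites every file that contains the key, which is why locating the affected files matters. The `ToyTable` class is hypothetical and stands in for what table formats manage for you.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTable:
    # Each inner list stands in for one data file.
    files: list[list[dict]]
    # Soft-delete metadata: keys that readers must hide.
    tombstones: set[str] = field(default_factory=set)

    def soft_delete(self, key: str) -> None:
        """Mark a record as deleted without touching any data file."""
        self.tombstones.add(key)

    def hard_delete(self, key: str) -> int:
        """Physically remove a record by rewriting only the files that hold it.

        Returns the number of files rewritten.
        """
        rewritten = 0
        for i, rows in enumerate(self.files):
            if any(r["id"] == key for r in rows):
                # Replace the old file with a rewritten copy that omits the record.
                self.files[i] = [r for r in rows if r["id"] != key]
                rewritten += 1
        self.tombstones.discard(key)  # the marker is no longer needed
        return rewritten

    def read(self) -> list[dict]:
        """Readers combine the data files with the soft-delete metadata."""
        return [r for rows in self.files for r in rows if r["id"] not in self.tombstones]
```

After `soft_delete("b")`, readers no longer see record `b`, but it still exists in storage. Only `hard_delete("b")` removes the bytes, which is what erasure requirements usually demand.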

Updating Data:

- Append and Versioning: New data with updates is appended, and versioning is used to track changes.

- Delta Lake and Apache Hudi: These frameworks provide ACID properties on top of the data lake, enabling efficient updates and deletes without compromising data integrity.
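The append-and-versioning pattern can be illustrated with an append-only commit log. Every write adds new files plus a new table version, and readers reconstruct any version by replaying the log. The model is loosely inspired by how Delta Lake and Apache Hudi track changes, but it is a simplified, hypothetical model, not their actual log format or API. There is no concurrency control, and an update is modeled as "remove the old file, add a rewritten one".

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class VersionedTable:
    # Data files are written once and never modified afterwards.
    files: dict[str, list[dict]] = field(default_factory=dict)
    # Append-only transaction log: entry N describes table version N.
    log: list[dict] = field(default_factory=list)

    def _commit(self, add: dict[str, list[dict]], remove: list[str]) -> int:
        self.files.update(add)
        self.log.append({"add": list(add), "remove": remove})
        return len(self.log) - 1  # the new version number

    def _live_files(self, version: int) -> list[str]:
        """Replay the log up to `version` to find the files that make up that version."""
        live: list[str] = []
        for entry in self.log[: version + 1]:
            live = [f for f in live if f not in entry["remove"]] + entry["add"]
        return live

    def append(self, rows: list[dict]) -> int:
        return self._commit({f"part-{len(self.files):05d}": rows}, [])

    def update(self, key: str, changes: dict) -> int:
        """Copy-on-write update: affected files are rewritten as new files."""
        add: dict[str, list[dict]] = {}
        remove: list[str] = []
        for name in self._live_files(len(self.log) - 1):
            rows = self.files[name]
            if any(r["id"] == key for r in rows):
                new_name = f"part-{len(self.files) + len(add):05d}"
                add[new_name] = [{**r, **changes} if r["id"] == key else r for r in rows]
                remove.append(name)
        return self._commit(add, remove)

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Read the latest version, or 'time travel' to an older one."""
        v = len(self.log) - 1 if version is None else version
        return [r for name in self._live_files(v) for r in self.files[name]]
```

After an update, `read(0)` still returns the original rows, because the old file was never modified. That is the history that versioning preserves. Real table formats add concurrency control and many optimizations on top of this basic idea.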

Data Lake vs. Traditional Databases

Key Differences:

- CRUD Operations: Traditional databases excel at CRUD operations, while data lakes prioritize immutability and append-only storage.

- ACID Transactions: While not inherent to data lakes, frameworks like Delta Lake bring ACID properties to the table.

- Procedures and UDFs: Traditional databases offer built-in support for stored procedures and user-defined functions (UDFs), while data lakes rely on external processing frameworks.

Backup and High Availability in Data Lakes

Ensuring the continuous availability of data and the ability to recover from failures is paramount for any data lake implementation. This involves a two-pronged approach: robust backup mechanisms and high availability architecture.

Backup

Data lakes, especially those dealing with large volumes of critical data, require comprehensive backup strategies to protect against data loss due to hardware failures, accidental deletions, or even malicious attacks.

- Cloud-based Data Lakes: Cloud providers like AWS, Azure, and GCP offer built-in backup and disaster recovery capabilities for their data lake storage solutions. These services often provide automated backups, versioning, and replication across multiple availability zones or regions. This ensures data durability and allows for quick recovery in case of outages.

Example: In AWS, you can use S3 versioning to automatically keep multiple versions of an object. If a file is accidentally deleted or corrupted, you can easily restore a previous version. You can also configure cross-region replication to copy data to another region for disaster recovery.
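With boto3, the AWS SDK for Python, enabling versioning and rolling an object back could look roughly like the sketch below. `put_bucket_versioning`, `list_object_versions`, and `copy_object` are real S3 client methods. The bucket and key names are placeholders, and the two helper functions are hypothetical. The sketch also ignores pagination, since `list_object_versions` returns at most 1,000 entries per call by default. The client is passed in as a parameter, so the functions can be exercised without AWS access.

```python
def enable_versioning(s3, bucket: str) -> None:
    """Turn on S3 versioning so overwrites and deletes keep older versions."""
    s3.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )


def restore_previous_version(s3, bucket: str, key: str) -> str:
    """Make the newest non-current version of `key` the current one again.

    Covers both cases from the text: if the object was deleted (a delete
    marker is latest), every real version is non-current, so the newest one
    is restored; if it was overwritten, the version before the current one
    is restored. Returns the VersionId that was copied back.
    """
    resp = s3.list_object_versions(Bucket=bucket, Prefix=key)
    # Prefix matching can return other keys, so keep exact matches only.
    candidates = sorted(
        (v for v in resp.get("Versions", []) if v["Key"] == key and not v["IsLatest"]),
        key=lambda v: v["LastModified"],
        reverse=True,
    )
    if not candidates:
        raise LookupError(f"no earlier version of s3://{bucket}/{key} to restore")
    version_id = candidates[0]["VersionId"]
    # Copying an old version onto the same key creates a new current version;
    # the existing version history is left untouched.
    s3.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key, "VersionId": version_id},
    )
    return version_id


# Usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   s3 = boto3.client("s3")
#   enable_versioning(s3, "my-data-lake")
#   restore_previous_version(s3, "my-data-lake", "domains/sales/orders/part-0001.parquet")
```

Restoring by copying, rather than by deleting newer versions, keeps the restore itself auditable.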

- On-premise Data Lakes: For on-premise data lakes, establishing a robust backup system requires a more hands-on approach. This typically involves regular snapshots of the data lake, data replication to secondary storage, and potentially the use of third-party backup solutions.

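Example: In Hadoop, you can enable snapshots on a directory with hdfs dfsadmin -allowSnapshot and then create read-only, point-in-time snapshots of your HDFS data lake with hdfs dfs -createSnapshot. Because HDFS snapshots are stored on the same cluster, copy them to separate storage devices or a remote cluster (for example, with hadoop distcp) for added security.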
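This snapshot-and-copy routine is easy to script. The sketch below builds the standard HDFS commands (`hdfs dfsadmin -allowSnapshot`, `hdfs dfs -createSnapshot`, and `hadoop distcp` reading from the read-only `.snapshot` directory). It executes them through `subprocess` only when `dry_run` is turned off. The paths, the snapshot naming scheme, and the backup cluster URI are placeholders.

```python
import subprocess
from datetime import datetime, timezone


def snapshot_backup_commands(path: str, backup_uri: str, name: str,
                             allow: bool = False) -> list[list[str]]:
    """Build the commands that snapshot an HDFS directory and copy the snapshot off-cluster."""
    commands = []
    if allow:
        # One-time admin step: make the directory snapshottable.
        commands.append(["hdfs", "dfsadmin", "-allowSnapshot", path])
    # Read-only, point-in-time snapshot; no data blocks are copied.
    commands.append(["hdfs", "dfs", "-createSnapshot", path, name])
    # The snapshot lives on the same cluster, so copy it elsewhere with DistCp.
    commands.append(["hadoop", "distcp", f"{path}/.snapshot/{name}", f"{backup_uri}/{name}"])
    return commands


def run_backup(path: str, backup_uri: str, allow: bool = False,
               dry_run: bool = True) -> list[list[str]]:
    """Print the commands (dry run) or execute them, stopping at the first failure."""
    name = "backup-" + datetime.now(timezone.utc).strftime("%Y%m%d-%H%M%S")
    commands = snapshot_backup_commands(path, backup_uri, name, allow)
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Placeholder paths; set dry_run=False on a host with the Hadoop CLI configured.
    run_backup("/data/lake", "hdfs://backup-namenode:8020/backups/lake")
```

Timestamped snapshot names keep each backup distinct, and a scheduler such as cron could run the script regularly.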

High Availability

High availability focuses on minimizing downtime and ensuring continuous access to data even in the face of failures.

- Cloud-based Data Lakes: Cloud providers excel at providing high availability through their infrastructure. They offer redundant storage and compute resources across multiple availability zones or regions. This ensures that if one component fails, another can take over seamlessly.

Example: Azure Data Lake Storage Gen2 inherits the redundancy options of Azure Storage. Locally redundant storage (LRS) keeps three copies of the data within a single datacenter in the primary region. Zone-redundant storage (ZRS) spreads copies across availability zones in that region. Geo-redundant storage (GRS) additionally replicates data to a secondary region, providing higher durability and disaster recovery capabilities.

- On-premise Data Lakes: Achieving high availability in on-premise data lakes requires careful architecture design. This often involves deploying redundant hardware, configuring data replication across multiple servers, and implementing failover mechanisms to automatically switch to backup systems in case of failures.

Example: In a Hadoop cluster, you can configure NameNode High Availability (HA) to have a standby NameNode that can take over if the active NameNode fails. This ensures that the cluster remains operational even if a critical component goes down.
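For orientation, the sketch below generates the core `hdfs-site.xml` properties behind NameNode HA with the Quorum Journal Manager. The property names are the standard Hadoop ones. The nameservice ID, host names, and ports are placeholders. A working setup also needs fencing methods (`dfs.ha.fencing.methods`), HTTP addresses, and, for automatic failover, ZooKeeper settings (`ha.zookeeper.quorum` in `core-site.xml`), none of which this sketch covers.

```python
import xml.etree.ElementTree as ET


def namenode_ha_properties(ns: str, namenodes: dict[str, str],
                           journal_nodes: list[str]) -> dict[str, str]:
    """Core hdfs-site.xml settings for a QJM-based HA nameservice (partial)."""
    props = {
        "dfs.nameservices": ns,
        "dfs.ha.namenodes." + ns: ",".join(namenodes),
        # Shared edit log: the active NameNode writes edits to the JournalNodes,
        # and the standby reads them to stay in sync.
        "dfs.namenode.shared.edits.dir":
            "qjournal://" + ";".join(f"{h}:8485" for h in journal_nodes) + f"/{ns}",
        # Lets clients locate whichever NameNode is currently active.
        "dfs.client.failover.proxy.provider." + ns:
            "org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider",
        "dfs.ha.automatic-failover.enabled": "true",
    }
    for nn_id, host in namenodes.items():
        props[f"dfs.namenode.rpc-address.{ns}.{nn_id}"] = f"{host}:8020"
    return props


def to_hadoop_xml(props: dict[str, str]) -> str:
    """Render properties in Hadoop's <configuration><property> format."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    props = namenode_ha_properties(
        "mycluster",
        {"nn1": "namenode1.example.com", "nn2": "namenode2.example.com"},
        ["jn1.example.com", "jn2.example.com", "jn3.example.com"],
    )
    print(to_hadoop_xml(props))
```

Three JournalNodes is the usual minimum, because edits must reach a majority of them. That lets the cluster tolerate the loss of one.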

By implementing a combination of robust backup and high availability measures, organizations can ensure the resilience and reliability of their data lakes, safeguarding their valuable data assets and ensuring business continuity.

The Data Swamp: Understanding and Avoiding the Pitfalls

While data lakes offer immense potential, they can devolve into "data swamps" if not managed effectively.

A data swamp is characterized by a lack of organization, poor data quality, and difficulty finding and accessing relevant information.

Why Data Swamps Happen:

- Lack of Governance: Without clear data governance policies and procedures, data can be ingested without proper metadata, quality checks, or access controls.

- Poor Data Quality: Inconsistent data formats, missing values, and duplicate records can accumulate, making it difficult to trust the data.

- Lack of Metadata Management: Without a comprehensive data catalog and metadata management, it becomes challenging to discover and understand data assets.

- Inadequate Data Architecture: Poorly designed data lakes, without clear zones or structures, can lead to a chaotic organization of data.

Avoiding the Data Swamp:

- Establish Clear Data Governance: Implement data governance policies, procedures, and roles to ensure data quality, consistency, and security.

- Prioritize Data Quality: Implement data quality checks and cleansing processes at each stage of the data pipeline.

- Invest in Metadata Management: Build a comprehensive data catalog with rich metadata to facilitate data discovery and understanding.

- Design a Well-Structured Data Lake: Organize data into domain-specific zones and use appropriate file formats and storage technologies.

- Embrace Automation: Automate data ingestion, processing, and quality checks to reduce manual effort and improve efficiency.
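Prioritizing data quality and automating checks often comes down to a gate in the ingestion pipeline. A batch is accepted into a curated zone only if it arrives with the required metadata and passes basic row-level checks, and everything else is quarantined. The sketch below is a hypothetical, framework-free version of such a gate. The required metadata fields, the rules, and the 5% rejection threshold are assumptions to adapt.

```python
from dataclasses import dataclass

# Hypothetical minimum metadata every batch must carry into the catalog.
REQUIRED_METADATA = {"source", "owner", "domain", "schema_version"}


@dataclass
class GateResult:
    accepted: list[dict]
    rejected: list[tuple[dict, str]]  # (row, reason)
    batch_ok: bool


def quality_gate(rows: list[dict], metadata: dict, required: set[str],
                 key: str, max_reject_ratio: float = 0.05) -> GateResult:
    """Check metadata, missing values, and duplicate keys for one batch."""
    missing_meta = REQUIRED_METADATA - set(metadata)
    if missing_meta:
        # Without metadata there is no catalog entry, so quarantine the whole batch.
        reason = f"missing metadata: {sorted(missing_meta)}"
        return GateResult([], [(r, reason) for r in rows], False)
    accepted, rejected, seen = [], [], set()
    for row in rows:
        absent = [c for c in sorted(required) if row.get(c) in (None, "")]
        if absent:
            rejected.append((row, f"missing values: {absent}"))
        elif row[key] in seen:
            rejected.append((row, "duplicate key"))
        else:
            seen.add(row[key])
            accepted.append(row)
    ratio = len(rejected) / len(rows) if rows else 0.0
    # A batch with too many bad rows is flagged for review instead of promoted.
    return GateResult(accepted, rejected, ratio <= max_reject_ratio)
```

Keeping the rejected rows together with a reason gives data owners something actionable. Failing the whole batch above a threshold stops a broken upstream feed from silently filling the lake.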

When is a Data Swamp Acceptable (or even Desirable)?

While generally undesirable, there are specific scenarios where a data swamp might be a temporary or even intentional state:

- Early-stage Exploration: In the initial stages of a project, when the data landscape is still unclear, a data swamp might be tolerated to facilitate rapid data exploration and hypothesis generation.

- Low-value Data: If the data has low business value or is not critical for decision-making, the strict organization and quality controls of a fully governed data lake might not be justified.

- Cost Constraints: Implementing a fully governed and organized data lake can be expensive. In situations with limited resources, a data swamp might be accepted as a temporary trade-off.

However, it's crucial to remember that even in these scenarios, a plan should be in place to eventually evolve the data swamp into a more structured and governed data lake to avoid long-term challenges.

By understanding the causes and consequences of data swamps and implementing preventive measures, organizations can ensure their data lakes remain valuable assets that drive insights and informed decision-making.

Real-life Examples of Data Lakes in Action

Data lakes have become essential tools for organizations across various industries, enabling them to harness the value of their data for diverse purposes. Let's explore some real-life examples in greater detail:

E-commerce

In the highly competitive world of e-commerce, data is king. Data lakes provide a centralized repository for capturing and analyzing a wide range of data, including:

- Customer Data: This includes demographics, purchase history, browsing behavior, product reviews, and social media interactions.

- Product Information: Details about products, such as descriptions, prices, inventory levels, and supplier information.

- Order History: Records of all transactions, including order details, shipping information, and payment data.

- Website Traffic Logs: Information about website visitors, including page views, clickstream data, and referral sources.

By analyzing this data, e-commerce companies can:

- Personalize Recommendations: Offer customers tailored product recommendations based on their past purchases and browsing history.

- Target Marketing Campaigns: Segment customers and deliver targeted marketing messages based on their demographics, interests, and purchase behavior.

- Optimize Inventory: Predict demand for products and optimize inventory levels to minimize stockouts and overstocking.

- Improve Customer Service: Identify and address customer pain points by analyzing customer feedback and support interactions.
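As a small illustration of recommendations drawn from order history, a simple "customers who bought X also bought Y" approach counts how often products appear in the same order. This is one basic technique chosen for the example, not a description of how any particular retailer works. Production systems would typically run similar aggregations with a processing framework over the order data in the lake.

```python
from collections import Counter
from itertools import combinations


def co_purchase_counts(orders: list[set[str]]) -> dict[str, Counter]:
    """For each product, count how often every other product shares an order with it."""
    counts: dict[str, Counter] = {}
    for items in orders:
        for a, b in combinations(sorted(items), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def recommend(counts: dict[str, Counter], purchased: set[str], k: int = 3) -> list[str]:
    """Score candidates by co-occurrence with the customer's past purchases."""
    scores: Counter = Counter()
    for product in purchased:
        scores.update(counts.get(product, Counter()))  # adds counts together
    for product in purchased:
        scores.pop(product, None)  # don't recommend what the customer already has
    # Highest score first; ties broken by name so results are deterministic.
    return [p for p, _ in sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))[:k]]
```

For example, if tents often appear in the same orders as stoves and lamps, a customer who bought a tent is shown stoves and lamps first.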

Healthcare

Data lakes are transforming the healthcare industry by enabling the integration and analysis of vast amounts of data from various sources:

- Patient Records: Electronic health records (EHRs), including medical history, diagnoses, treatments, and lab results.

- Clinical Trial Data: Data collected from clinical trials, including patient demographics, treatment protocols, and outcomes.

- Medical Imaging: X-rays, MRIs, and other medical images.

- Genomic Data: Information about patients' genetic makeup.

- Claims Data: Data related to healthcare claims and insurance.

With a data lake, healthcare organizations can:

- Facilitate Research: Analyze large datasets to identify trends, patterns, and insights that can lead to new treatments and cures.

- Improve Diagnostics: Develop more accurate diagnostic tools by leveraging machine learning algorithms trained on large datasets of patient data and medical images.

- Enable Personalized Medicine: Tailor treatments to individual patients based on their unique genetic makeup and medical history.

- Enhance Public Health: Track and analyze disease outbreaks, monitor public health trends, and develop effective prevention strategies.

Financial Services

Financial institutions rely on data lakes to manage and analyze vast quantities of data for various purposes:

- Transaction Data: Records of all financial transactions, including deposits, withdrawals, transfers, and payments.

- Market Data: Real-time and historical data on stocks, bonds, currencies, and other financial instruments.

- Customer Profiles: Detailed information about customers, including their financial history, risk profile, and investment preferences.

- Regulatory Data: Data related to compliance with financial regulations.

By leveraging data lakes, financial institutions can:

- Detect Fraud: Identify suspicious transactions and patterns that may indicate fraudulent activity.

- Manage Risk: Assess and manage financial risk by analyzing market data, customer profiles, and economic indicators.

- Develop Investment Strategies: Use historical and real-time data to develop and optimize investment strategies.

- Improve Customer Service: Gain a deeper understanding of customer needs and preferences to provide personalized financial advice and services.
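A very simple form of identifying suspicious transactions compares each new transaction with the customer's own history in the lake and flags amounts far outside their usual range. The z-score rule, the threshold of 3, and the minimum of five past transactions are illustrative assumptions. Real fraud detection combines many more signals and usually machine learning models.

```python
from statistics import mean, pstdev


def flag_unusual(history: dict[str, list[float]], new_txns: list[tuple[str, float]],
                 threshold: float = 3.0, min_history: int = 5) -> list[tuple[str, float]]:
    """Return (customer, amount) pairs whose amount is an outlier for that customer."""
    flagged = []
    for customer, amount in new_txns:
        past = history.get(customer, [])
        if len(past) < min_history:
            continue  # not enough history to judge; leave it to other checks
        mu, sigma = mean(past), pstdev(past)
        if sigma == 0:
            # Perfectly regular history: any deviation is unusual.
            if amount != mu:
                flagged.append((customer, amount))
        elif abs(amount - mu) / sigma > threshold:
            flagged.append((customer, amount))
    return flagged
```

Scoring against each customer's own baseline, rather than a global one, means a large purchase is only flagged when it is unusual for that particular customer.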

These examples highlight the versatility and power of data lakes in enabling organizations to extract valuable insights from their data, drive innovation, and gain a competitive edge.

Software and Platforms for Building Data Lakes

Building a data lake involves choosing the right software and platforms to support data storage, processing, management, and analysis. Here's a breakdown of popular options, categorized by deployment model and licensing:

Cloud-Based

AWS: Amazon Web Services offers a comprehensive suite of services for building and managing data lakes.

- Amazon S3: A highly scalable and durable object storage service for storing raw and processed data.

- AWS Glue: A serverless data integration service that simplifies data discovery, ETL (extract, transform, load) processes, and data cataloging.

- Amazon Athena: An interactive query service that allows you to analyze data in S3 using standard SQL.

- AWS Lake Formation: A service that helps you build, secure, and manage data lakes, simplifying data access control and governance.

- Amazon EMR: A managed cluster platform for running big data frameworks such as Apache Hadoop, Spark, and Hive.

- Licensing: Pay-as-you-go pricing based on usage.

Azure: Microsoft Azure provides a robust platform for data lake implementation with integrated services.

- Azure Data Lake Storage Gen2: A highly scalable and cost-effective data lake storage service built on Azure Blob Storage.

- Azure Synapse Analytics: An analytics service that brings together data integration, enterprise data warehousing, and big data analytics.

- Azure Databricks: A managed Spark service for large-scale data processing and machine learning.

- Azure Data Factory: A visual data integration tool for creating ETL and ELT pipelines.

- Licensing: Pay-as-you-go pricing based on usage.

GCP: Google Cloud Platform offers a powerful and scalable infrastructure for building data lakes.

- Google Cloud Storage: A highly durable and scalable object storage service for storing any type of data.

- BigQuery: A serverless, highly scalable, and cost-effective multicloud data warehouse for analytics.

- Dataproc: A managed Hadoop and Spark service for running big data workloads.

- Cloud Dataflow: A fully managed service for batch and stream data processing.

- Licensing: Pay-as-you-go pricing based on usage.

On-Premise

Hadoop: The original open-source framework for distributed storage and processing of large datasets.

- HDFS: The Hadoop Distributed File System for storing large volumes of data across a cluster of machines.

- Hive: A data warehouse infrastructure built on top of Hadoop for providing data summarization, query, and analysis.

- Spark: A fast and general-purpose cluster computing system for big data processing.

- YARN: Yet Another Resource Negotiator for managing resources and scheduling applications in a Hadoop cluster.

- Licensing: Open-source, free to use.

MinIO: A high-performance object storage server compatible with Amazon S3.

- Features: Provides high availability, scalability, and compatibility with S3 APIs.

- Licensing: Offers both a free community edition and a commercially licensed enterprise edition with additional features and support.

Delta Lake: An open-source storage layer that brings ACID transactions to data lakes.

- Features: Provides reliability, performance, and data quality for data lakes.

- Integrations: Works with Apache Spark and other data processing engines.

- Licensing: Open-source, free to use.

Debunking Misconceptions

A common misconception is that any system with multiple databases qualifies as a data lake. This is incorrect. A true data lake goes beyond simply storing data; it encompasses data governance, metadata management, and often utilizes domain-specific organization for better management.

Another misconception is that data lakes are only for "big data." While they excel at handling large volumes, data lakes can be valuable for organizations of any size. The key is to focus on the core principles and the benefits they bring in terms of data organization and accessibility.

Final Thoughts

The data lake has undoubtedly revolutionized the way organizations manage and analyze data. By providing a centralized, scalable, and flexible repository for diverse data types, data lakes empower businesses to unlock valuable insights, drive innovation, and gain a competitive advantage. However, it's crucial to recognize that a data lake is not a one-size-fits-all solution.

When is a data lake the right choice?

In my opinion, a data lake is most valuable when:

- You have a high volume and variety of data: If your organization deals with large amounts of structured, semi-structured, and unstructured data from various sources, a data lake provides the scalability and flexibility to handle it effectively.

- You need to support diverse use cases: Data lakes can support a wide range of use cases, from data exploration and analytics to machine learning and AI. If your organization needs a platform that can cater to different data needs, a data lake is an excellent choice.

- You require flexibility and agility: Data lakes allow you to store data in its raw format and define schemas later (schema-on-read). This provides flexibility and agility, especially when dealing with evolving data requirements.

- You have a strong data engineering team: Implementing and managing a data lake requires expertise in data engineering, data governance, and security. Ensure you have the right team in place to build and maintain a successful data lake.
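Schema-on-read means raw records are stored as they arrive and a schema is applied only when the data is read. As a result, different consumers can read the same files with different schemas. Here is a minimal standard-library sketch, where the field names and both schemas are hypothetical:

```python
import json
from typing import Any, Callable

# Raw events stored as-is; fields vary between producers and over time.
RAW = [
    '{"user": "u1", "amount": "12.50", "ts": "2024-05-01T10:00:00"}',
    '{"user": "u2", "amount": 7, "coupon": "SPRING"}',
    '{"user": "u3"}',
]


def read_with_schema(lines: list[str], schema: dict[str, Callable[[Any], Any]]) -> list[dict]:
    """Project and cast each raw record at read time; missing fields become None."""
    rows = []
    for line in lines:
        rec = json.loads(line)
        rows.append({col: (cast(rec[col]) if rec.get(col) is not None else None)
                     for col, cast in schema.items()})
    return rows


# Two consumers, two schemas, same stored data.
finance_view = read_with_schema(RAW, {"user": str, "amount": float})
marketing_view = read_with_schema(RAW, {"user": str, "coupon": str})
```

Nothing in storage had to change when the coupon field appeared. The flip side is that each reader must handle missing or inconsistent fields, which is why governance and a catalog still matter.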

Proceed with caution if:

- You primarily need transactional capabilities: If your primary need is to support transactional workloads with strict ACID properties, a traditional relational database might be a better fit. While frameworks like Delta Lake provide ACID capabilities on top of data lakes, they may add complexity.

- You have limited data engineering resources: Building and maintaining a data lake requires significant data engineering expertise. If your organization lacks these resources, consider alternative solutions or cloud-based managed services.

By carefully considering these factors, organizations can make informed decisions about whether a data lake is the right solution for their specific needs and ensure they maximize the value of their data assets.