Drawing on my experience designing an information feed system that prepared a data product from the main application's domain model, this article takes a deep dive into real-time data processing and stream analytics. We will explore how these intertwined disciplines help organizations extract immediate insights from high-velocity data streams, enabling timely action and data-driven decisions. The article covers the fundamental concepts, architectural components, and common challenges, offering an overview for experienced data professionals.

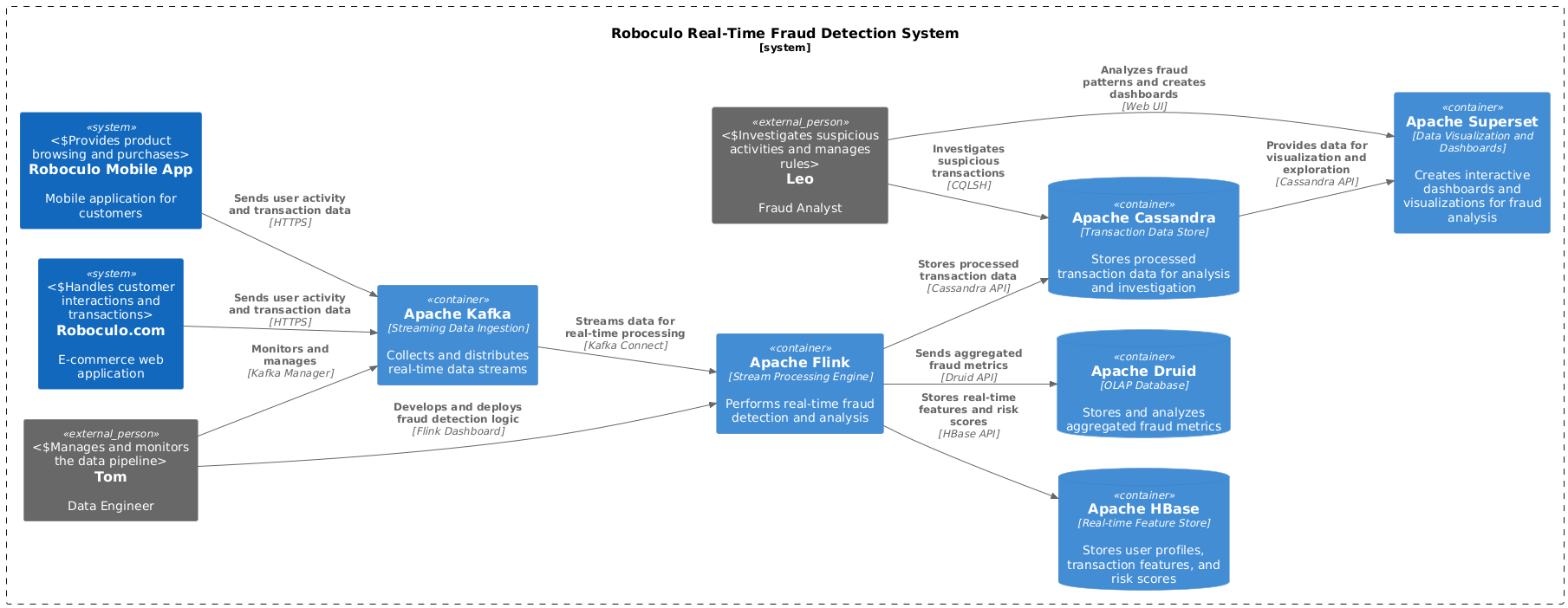

To keep the discussion concrete without disclosing sensitive information, I will use an example that is easy to relate to: a fraud detection system.

The Rise of Real-Time

Traditional batch processing, with its inherent latency, is increasingly inadequate for applications demanding immediate insights. My experience building a fraud detection system for a high-volume e-commerce platform underscored this reality. To effectively identify and prevent fraudulent transactions, we needed to analyze user behavior, payment patterns, and various risk factors in real time.

This shift towards real-time is fueled by:

- Increased data velocity: The proliferation of IoT devices, social media, and online transactions generates a constant deluge of data.

- Demand for instant insights: Businesses need to react swiftly to changing trends, customer behavior, and operational anomalies.

- Advanced analytics: Real-time processing enables sophisticated analytics, such as anomaly detection, predictive modeling, and machine learning, to be applied to live data streams.

Core Concepts and Architecture

Real-time data processing involves a pipeline of interconnected components working in concert to ingest, process, and analyze data streams with minimal latency. Key architectural elements include:

Data Sources: These can range from sensors and mobile devices to web applications and databases, all generating a continuous flow of data. In the fraud detection system, our data sources included user activity logs, payment gateways, and third-party risk scoring services.

Message Brokers: Acting as a buffer, message brokers like Kafka, RabbitMQ, or Pulsar provide a reliable and scalable platform for ingesting and distributing streaming data. They decouple data producers from consumers, ensuring fault tolerance and high availability. We opted for Kafka due to its high throughput and robust fault tolerance.

Stream Processing Engine: This is the heart of the pipeline, responsible for performing transformations, aggregations, and computations on the incoming data stream. Popular choices include Apache Flink, Apache Spark Streaming, and Apache Storm. For our fraud detection system, we leveraged Flink's ability to handle high-velocity streams and perform complex event processing.

Data Sink: Processed data is typically stored in a data store optimized for real-time access, such as a time-series database (InfluxDB, Prometheus), a NoSQL database (Cassandra, MongoDB), or a data warehouse (Snowflake, BigQuery). We utilized Cassandra to store processed transaction data for real-time querying and historical analysis.

Analytics and Visualization: Real-time dashboards and visualization tools enable users to monitor key metrics, identify trends, and gain immediate insights from the processed data. We built a custom dashboard to visualize fraud patterns, track system performance, and alert security personnel to suspicious activity.

Stream Processing Paradigms: Navigating the Continuum

The world of stream processing offers a spectrum of approaches, each with its own trade-offs in terms of latency, complexity, and resource utilization. While the fundamental goal remains the same – to process data continuously with minimal delay – the strategies employed can vary significantly. Let's delve deeper into the prominent paradigms:

a) Micro-batching: The Illusion of Real-Time

Micro-batching, as the name suggests, involves dividing the continuous data stream into small, manageable batches and processing them at regular intervals. This approach leverages the mature ecosystem of batch processing frameworks, making it relatively easier to implement and manage. However, this convenience comes at the cost of introducing latency. The size of the micro-batches and the processing interval determine the overall delay in obtaining results.

Spark Streaming, a popular component of the Apache Spark ecosystem, exemplifies this paradigm. It extends Spark's batch processing capabilities to handle streaming data by treating the stream as a sequence of small batches. While this approach simplifies development and leverages Spark's rich functionalities, it inherently introduces latency due to the batching process.
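To make the latency cost of micro-batching concrete, here is a minimal sketch in plain Python. It is not the Spark API: the `micro_batches` function, the `(timestamp, payload)` event shape, and the one-second interval are assumptions made for illustration only.

```python
from typing import Iterable, Iterator, List, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, payload); hypothetical event shape


def micro_batches(events: Iterable[Event], interval: float) -> Iterator[List[Event]]:
    """Group time-ordered events into consecutive batches of `interval` seconds."""
    batch: List[Event] = []
    batch_end = None
    for ts, payload in events:
        if batch_end is None:
            batch_end = ts + interval  # the first event opens the first interval
        # Close the current batch (skipping empty intervals) before placing the event
        while ts >= batch_end:
            if batch:
                yield batch
                batch = []
            batch_end += interval
        batch.append((ts, payload))
    if batch:
        yield batch  # flush whatever is left when the input ends


events = [(0.0, "a"), (0.4, "b"), (1.1, "c"), (2.7, "d")]
batches = list(micro_batches(events, interval=1.0))
# batches == [[(0.0, "a"), (0.4, "b")], [(1.1, "c")], [(2.7, "d")]]
```

Event "a" arrives at 0.0 but only becomes visible downstream once its batch closes, so in this sketch the batching interval is roughly the worst-case delay added to any single event.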

b) True Streaming: Embracing the Continuous Flow

In contrast to micro-batching, true streaming operates on individual events as they arrive, striving to minimize latency and deliver real-time insights. This paradigm requires a different architectural approach and specialized processing engines capable of handling the continuous influx of data.

Apache Flink stands out as a leading example of a true streaming engine. It employs a sophisticated architecture designed to process events with minimal delay, offering features like event-time processing, state management, and windowing operations. Flink's ability to handle complex event processing and maintain state across events makes it well-suited for applications demanding low latency and intricate analysis.
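Event-time processing, one of the features mentioned above, can be illustrated with a small event-at-a-time sketch. It is loosely modelled on the idea of watermarks and is not Flink's API: the `reorder_by_event_time` function, the `max_lateness_ms` parameter, and the decision to drop late events are all assumptions of this example.

```python
import heapq
from typing import Iterable, Iterator, List, Tuple

Event = Tuple[int, str]  # (event_time_ms, payload); hypothetical event shape


def reorder_by_event_time(events: Iterable[Event], max_lateness_ms: int) -> Iterator[Event]:
    """Emit events in event-time order, tolerating bounded out-of-order arrival."""
    pending: List[Event] = []      # min-heap ordered by event time
    watermark = float("-inf")      # "no event at or before this time is still expected"
    for event in events:
        if event[0] <= watermark:
            continue               # arrived too late; this sketch simply drops it
        heapq.heappush(pending, event)
        # The watermark trails the largest event time seen so far by the allowed lateness
        watermark = max(watermark, event[0] - max_lateness_ms)
        # Everything at or before the watermark is safe to release, oldest first
        while pending and pending[0][0] <= watermark:
            yield heapq.heappop(pending)
    while pending:                 # end of input: flush the remainder in order
        yield heapq.heappop(pending)


arrivals = [(1000, "a"), (3000, "b"), (2000, "c"), (6000, "d"), (2500, "e")]
ordered = list(reorder_by_event_time(arrivals, max_lateness_ms=2000))
# ordered == [(1000, "a"), (2000, "c"), (3000, "b"), (6000, "d")]; "e" arrived too late
```

Each event is handled the moment it arrives, with no batching interval. The lateness bound is the trade-off knob: a larger bound tolerates more disorder but holds events back longer.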

c) Hybrid Approaches: Bridging the Gap

Recognizing the strengths and weaknesses of each paradigm, some systems adopt a hybrid approach, combining elements of both micro-batching and true streaming. This allows them to leverage the simplicity of micro-batching for certain tasks while utilizing true streaming for others, depending on the specific requirements.

For instance, a system might use micro-batching for tasks that are less time-sensitive, such as generating aggregate reports, while employing true streaming for tasks requiring immediate action, such as fraud detection or anomaly detection.

Choosing the Right Paradigm: A Contextual Decision

The choice of stream processing paradigm is not a one-size-fits-all proposition. It depends on a variety of factors, including:

Latency requirements: How quickly do you need to process and react to incoming data?

Data volume and velocity: How much data are you dealing with, and how fast is it arriving?

Complexity of processing logic: What kind of transformations and analysis do you need to perform?

Resource constraints: What are your computational and storage limitations?

Development expertise: What skills and experience does your team possess?

By carefully considering these factors, you can choose the most appropriate paradigm for your specific needs, ensuring that your stream processing system delivers the desired performance and insights.

Windowing and State Management

Two crucial concepts in stream processing are:

Windowing: Since data streams are unbounded, windowing techniques divide them into finite segments for analysis. Common windowing strategies include tumbling windows (fixed-size, non-overlapping), sliding windows (overlapping), and session windows (based on activity gaps). We utilized sliding windows to analyze user behavior over a specific time frame while considering recent activities.

State Management: Many stream processing operations require maintaining state across events. For example, calculating running aggregates or detecting patterns requires remembering previous values. Stream processing engines provide mechanisms for efficiently managing and updating state. Flink's state management capabilities were instrumental in tracking user activity patterns and identifying anomalies.
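The three windowing strategies and the idea of keyed state can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not our production code and not an engine API: the function names, the integer timestamps, and the `RecentActivity` class are hypothetical.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

Window = Tuple[int, int]  # half-open interval [start, end)


def tumbling_window(ts: int, size: int) -> Window:
    """The single fixed-size, non-overlapping window that contains ts."""
    start = ts - ts % size
    return (start, start + size)


def sliding_windows(ts: int, size: int, slide: int) -> List[Window]:
    """Every window of length `size`, advancing by `slide`, that contains ts."""
    last_start = ts - ts % slide
    return [(s, s + size) for s in range(last_start, ts - size, -slide)]


def session_windows(timestamps: List[int], gap: int) -> List[Tuple[int, int]]:
    """(first, last) event time of each session; a pause longer than `gap` splits sessions."""
    sessions: List[Tuple[int, int]] = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][1] <= gap:
            sessions[-1] = (sessions[-1][0], ts)   # extend the open session
        else:
            sessions.append((ts, ts))              # start a new session
    return sessions


class RecentActivity:
    """Keyed state: per-user event times within the last `window` seconds.

    Assumes each user's events arrive in non-decreasing time order.
    """

    def __init__(self, window: int) -> None:
        self.window = window
        self.state: Dict[str, Deque[int]] = defaultdict(deque)

    def update(self, user: str, ts: int) -> int:
        recent = self.state[user]
        recent.append(ts)
        while recent[0] <= ts - self.window:       # evict entries that slid out
            recent.popleft()
        return len(recent)                         # events in the current window


activity = RecentActivity(window=60)
counts = [activity.update("u1", 0), activity.update("u1", 30),
          activity.update("u2", 40), activity.update("u1", 70)]
# counts == [1, 2, 1, 2]: by t=70 the event at t=0 has left u1's 60-second window
```

A rule such as "flag a user with more than N transactions in the last minute" then becomes a comparison against the value returned by `update`.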

Challenges and Considerations: Navigating the Real-Time Labyrinth

While real-time data processing offers immense potential, it also presents a unique set of challenges that demand careful consideration and meticulous planning. These challenges span various aspects of the system, from data quality and fault tolerance to scalability and security. Let's explore some of the critical considerations:

a) Data Quality: The Foundation of Reliable Insights

In the world of real-time data processing, where decisions are made based on immediate insights, data quality is paramount. Ensuring the accuracy, completeness, and consistency of high-velocity data streams is crucial to avoid erroneous conclusions and misguided actions. This involves implementing robust data validation and cleansing mechanisms at various stages of the pipeline, from ingestion to processing and storage.

Consider a financial trading system that relies on real-time market data. Even a minor error in the data feed could lead to significant financial losses. Therefore, implementing data quality checks, such as schema validation, outlier detection, and data reconciliation, becomes essential to maintain the integrity of the system.

b) Fault Tolerance: Ensuring Resilience in the Face of Failures

Real-time systems must be designed to withstand failures and maintain operational continuity. This requires incorporating fault tolerance mechanisms at every level of the architecture. Message brokers should be configured for high availability, with data replication and failover capabilities. Stream processing engines should provide checkpointing and recovery mechanisms to ensure that processing can resume seamlessly in case of disruptions.

Imagine a real-time traffic monitoring system that guides autonomous vehicles. A failure in the system could have disastrous consequences. Therefore, implementing redundant components, backup systems, and failover strategies is crucial to ensure the system's resilience and prevent disruptions.
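The checkpoint-and-recover idea can be sketched as follows. This is a toy, single-process illustration rather than how any particular engine implements it: the JSON file, the `run` function, and the `crash_at` switch used to simulate a failure are assumptions of the example.

```python
import json
import os
import tempfile
from typing import Dict, List, Tuple


def load_checkpoint(path: str) -> Tuple[int, Dict[str, float]]:
    """Return (next offset to read, per-user totals); start fresh if none exists."""
    if not os.path.exists(path):
        return 0, {}
    with open(path) as f:
        data = json.load(f)
    return data["offset"], data["totals"]


def save_checkpoint(path: str, offset: int, totals: Dict[str, float]) -> None:
    # Write to a temporary file, then rename, so a crash never leaves a torn checkpoint
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"offset": offset, "totals": totals}, f)
    os.replace(tmp, path)


def run(log: List[Tuple[str, float]], path: str, every: int = 2,
        crash_at: int = -1) -> Dict[str, float]:
    """Sum amounts per user, checkpointing state and offset together every `every` events."""
    offset, totals = load_checkpoint(path)
    for i in range(offset, len(log)):
        if i == crash_at:
            raise RuntimeError("simulated crash")
        user, amount = log[i]
        totals[user] = totals.get(user, 0.0) + amount
        if (i + 1) % every == 0:
            save_checkpoint(path, i + 1, totals)
    return totals


log = [("u1", 10.0), ("u2", 5.0), ("u1", 2.5), ("u2", 1.0), ("u1", 4.0)]
with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "checkpoint.json")
    try:
        run(log, path, crash_at=3)   # fails after the checkpoint at offset 2
    except RuntimeError:
        pass
    recovered = run(log, path)       # resumes from offset 2 instead of starting over
# recovered == {"u1": 16.5, "u2": 6.0}, the same result as an uninterrupted run
```

Because the offset and the state are saved together, replaying from the checkpoint does not double-count the events processed before the crash. Side effects outside the checkpoint, such as alerts already sent, could still repeat and would need separate handling.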

c) Scalability: Adapting to the Ever-Growing Data Deluge

As data volumes continue to surge, real-time systems must be able to scale seamlessly to accommodate the increasing load. This requires careful capacity planning and the adoption of scalable architectures that can handle growing data velocity and user demand. Horizontal scalability, where additional processing nodes can be added to the system as needed, is often preferred for its flexibility and cost-effectiveness.

Consider a social media platform that processes millions of user interactions per second. The system must be able to scale dynamically to handle peak usage during events or trending topics. This requires a scalable architecture that can distribute the load across multiple nodes and adapt to fluctuating demand.
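One common way to distribute load across nodes is to partition the stream by key, so that every event for a given key lands on the same worker. The sketch below shows the idea with a stable hash; `partition_for` and the `user-N` keys are hypothetical, and SHA-256 is used only as a convenient stand-in, not as the hash any particular broker uses.

```python
import hashlib
from collections import Counter


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition so all events for that key reach the same worker."""
    # A stable digest is used because Python's built-in hash() for strings is
    # randomized per process and would disagree between workers.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


# Spread 1,000 hypothetical user IDs over 8 partitions and count the load on each
load = Counter(partition_for(f"user-{i}", 8) for i in range(1000))
```

With simple modulo hashing like this, changing the number of partitions remaps most keys, which matters when per-key state lives on the workers.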

d) Latency: The Quest for Immediacy

Minimizing latency is a primary objective in real-time data processing. Every component of the pipeline, from data ingestion to processing and analysis, must be optimized to reduce delays and deliver timely insights. This often involves careful selection of technologies, efficient data structures, and optimized algorithms.

In a fraud detection system, every millisecond counts. The faster the system can identify and flag suspicious transactions, the greater the chance of preventing financial losses. Therefore, optimizing the pipeline for low latency becomes crucial to ensure the system's effectiveness.

e) Complexity: Managing the Intricacies of Real-Time Systems

Designing, developing, and operating real-time data processing systems can be complex and demanding. It requires specialized expertise in distributed systems, stream processing frameworks, and data management techniques. Managing the intricate interactions between various components, ensuring data consistency, and troubleshooting issues in a dynamic environment can be challenging.

Building a real-time recommendation engine for an e-commerce platform involves complex algorithms, data transformations, and user profiling. This requires a skilled team with expertise in machine learning, stream processing, and data engineering to ensure the system's accuracy and performance.

f) Security: Safeguarding Sensitive Data Streams

Real-time data often includes sensitive information, such as personal details, financial transactions, or confidential business data. Protecting this data from unauthorized access and breaches is crucial. This requires implementing robust security measures throughout the pipeline, including encryption, authentication, authorization, and auditing.

Consider a healthcare system that processes real-time patient data. Ensuring the confidentiality and integrity of this data is paramount. Implementing strong security protocols, such as data encryption, access control, and audit trails, becomes essential to comply with regulations and protect patient privacy.

By proactively addressing these challenges and adopting a comprehensive approach to system design and implementation, organizations can harness the power of real-time data processing to gain valuable insights, automate decision-making, and achieve a competitive advantage.

Technology Landscape

The ecosystem of tools and technologies for real-time data processing is constantly evolving. Here are some popular choices:

- Apache Kafka: A distributed streaming platform renowned for its high throughput, scalability, and fault tolerance.

- Apache Flink: A powerful stream processing engine with excellent state management and support for both batch and stream processing.

- Apache Spark Streaming: A micro-batching framework built on top of the Spark ecosystem, offering a wide range of data processing capabilities.

- Amazon Kinesis: A managed service from AWS for real-time data streaming, offering various features for data ingestion, processing, and analysis.

- Google Cloud Dataflow: A fully managed service from Google Cloud for batch and stream data processing, based on the Apache Beam programming model.

Best Practices: Architecting for Success in the Real-Time Realm

Building and operating real-time data processing systems is a multifaceted endeavor, requiring a careful blend of technical expertise, architectural foresight, and operational diligence. To navigate this complex landscape and ensure the success of your real-time initiatives, consider these best practices:

a) Schema Design: The Blueprint for Data Integrity

A well-defined schema is the bedrock of any data processing system, and real-time systems are no exception. A clear and consistent schema ensures interoperability between different components of the pipeline, facilitates data validation, and promotes data quality. When designing schemas for real-time data streams, consider the following:

Choose the right data format: Select a data format that is efficient, scalable, and suitable for your streaming needs. Popular choices include JSON, Avro, and Protobuf. Avro, with its schema evolution capabilities, is particularly well-suited for evolving data streams.

Embrace schema evolution: Real-world data streams rarely remain static. Design your schemas with evolution in mind, allowing for the addition or modification of fields without disrupting the entire pipeline.

Enforce schema validation: Implement schema validation at various points in the pipeline to ensure that incoming data conforms to the defined structure. This helps identify and handle erroneous data early on, preventing downstream issues.
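The schema evolution and validation points above can be illustrated without any serialization library. The sketch below mimics, in plain Python, the rule that a field added with a default keeps older records readable. The schema layout, field names, and the `"USD"` default are hypothetical and chosen only for this example.

```python
from typing import Any, Dict, Tuple

REQUIRED = object()  # sentinel meaning "no default: the field must be present"

# Hypothetical reader schema, version 2: field name -> (expected type, default)
SCHEMA_V2: Dict[str, Tuple[type, Any]] = {
    "transaction_id": (str, REQUIRED),
    "amount": (float, REQUIRED),
    "currency": (str, "USD"),  # added in v2 with a default, so v1 records still load
}


def read_record(raw: Dict[str, Any],
                schema: Dict[str, Tuple[type, Any]] = SCHEMA_V2) -> Dict[str, Any]:
    """Resolve a raw record against the reader schema, or raise ValueError."""
    record: Dict[str, Any] = {}
    for name, (expected_type, default) in schema.items():
        if name in raw:
            value = raw[name]
        elif default is not REQUIRED:
            value = default            # field missing in older data: use the default
        else:
            raise ValueError(f"missing required field: {name}")
        if not isinstance(value, expected_type):
            raise ValueError(f"{name}: expected {expected_type.__name__}")
        record[name] = value
    return record                      # fields unknown to this reader are ignored


v1_record = read_record({"transaction_id": "t1", "amount": 9.5})
# v1_record == {"transaction_id": "t1", "amount": 9.5, "currency": "USD"}
```

Ignoring unknown fields is what lets an older reader keep working when producers start sending newer records.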

b) Data Validation: Guarding Against Erroneous Information

In the high-velocity world of real-time data processing, erroneous data can quickly propagate through the system, leading to inaccurate insights and flawed decisions. Implementing robust data validation rules is crucial to identify and handle such data anomalies. Consider these strategies:

Define validation rules: Establish clear rules for data quality, such as range checks, data type validation, and consistency checks.

Implement validation mechanisms: Integrate data validation tools or custom scripts within your pipeline to automatically check incoming data against the defined rules.

Handle invalid data: Determine how to handle invalid data. Options include discarding the data, quarantining it for further investigation, or attempting to correct it based on predefined rules.
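The three steps above (define rules, check incoming data, decide what to do with failures) might look like this in a minimal form. The rules, thresholds, and currency list are illustrative assumptions, and quarantining is just one of the handling options mentioned.

```python
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Rule = Tuple[str, Callable[[Event], bool]]


def is_number(value: Any) -> bool:
    return isinstance(value, (int, float))


# Hypothetical rules: a type check, a range check, and a consistency check
RULES: List[Rule] = [
    ("amount is numeric", lambda e: is_number(e.get("amount"))),
    ("amount within range", lambda e: is_number(e.get("amount")) and 0 < e["amount"] <= 10_000),
    ("currency is supported", lambda e: e.get("currency") in {"USD", "EUR", "GBP"}),
]


def failed_rules(event: Event) -> List[str]:
    """Names of every rule the event violates (empty list means valid)."""
    return [name for name, check in RULES if not check(event)]


def route(events: List[Event]) -> Tuple[List[Event], List[Dict[str, Any]]]:
    """Split events into valid ones and quarantined ones with their failure reasons."""
    valid: List[Event] = []
    quarantined: List[Dict[str, Any]] = []
    for event in events:
        failures = failed_rules(event)
        if failures:
            quarantined.append({"event": event, "failures": failures})
        else:
            valid.append(event)
    return valid, quarantined


valid, quarantined = route([
    {"amount": 25.0, "currency": "USD"},
    {"amount": -5, "currency": "USD"},
    {"amount": "12", "currency": "XXX"},
])
# One event passes; the other two are quarantined with the rules they failed
```

Keeping the failure reasons next to the quarantined event makes later investigation and possible correction much easier than discarding it silently.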

c) Monitoring and Alerting: Keeping a Watchful Eye on the Pipeline

Real-time systems demand constant vigilance. Continuous monitoring of key metrics, such as data throughput, latency, error rates, and resource utilization, is crucial to ensure the system's health and performance. Establish a comprehensive monitoring strategy that includes:

Defining key metrics: Identify the critical metrics that reflect the system's performance and health.

Implementing monitoring tools: Utilize monitoring tools, such as Prometheus, Grafana, or Datadog, to collect, visualize, and analyze these metrics.

Setting up alerts: Configure alerts to notify you of critical events, such as performance degradation, resource exhaustion, or data anomalies. This allows for proactive intervention and prevents minor issues from escalating into major problems.
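In practice a tool such as Prometheus or Grafana would collect and evaluate these metrics, but the underlying logic of a threshold alert is simple. The sketch below keeps a rolling window of latencies and failures; the `PipelineMonitor` class and its limits (200 ms p95, 5% error rate) are hypothetical values chosen for illustration.

```python
from collections import deque
from typing import Deque, List


class PipelineMonitor:
    """Rolling view over the most recent `window` processed events."""

    def __init__(self, window: int = 100, p95_limit_ms: float = 200.0,
                 error_rate_limit: float = 0.05) -> None:
        self.latencies: Deque[float] = deque(maxlen=window)
        self.failures: Deque[bool] = deque(maxlen=window)
        self.p95_limit_ms = p95_limit_ms
        self.error_rate_limit = error_rate_limit

    def record(self, latency_ms: float, failed: bool = False) -> None:
        self.latencies.append(latency_ms)
        self.failures.append(failed)

    def p95_latency(self) -> float:
        ordered = sorted(self.latencies)
        # Nearest-rank percentile, computed with integers to avoid float rounding
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def error_rate(self) -> float:
        return sum(self.failures) / len(self.failures)

    def alerts(self) -> List[str]:
        """Messages for every threshold currently breached."""
        if not self.latencies:
            return []
        found = []
        if self.p95_latency() > self.p95_limit_ms:
            found.append("p95 latency above limit")
        if self.error_rate() > self.error_rate_limit:
            found.append("error rate above limit")
        return found


monitor = PipelineMonitor(window=20)
for _ in range(18):
    monitor.record(50.0)
monitor.record(900.0, failed=True)
monitor.record(900.0, failed=True)
active = monitor.alerts()
# active == ["p95 latency above limit", "error rate above limit"]
```

A percentile is used rather than the mean because a handful of very slow events can hide behind a healthy-looking average.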

d) Backpressure Handling: Gracefully Managing Overload

Real-time systems can encounter situations where the incoming data rate exceeds the processing capacity. This can lead to data loss, increased latency, or even system crashes. Implementing effective backpressure handling mechanisms is crucial to gracefully manage such overload scenarios. Consider these techniques:

Rate limiting: Control the rate at which data is ingested into the system to prevent overwhelming the processing capacity.

Load shedding: Selectively discard less critical data during periods of high load to maintain the system's stability.

Buffering: Utilize message queues or buffers to temporarily store incoming data when the processing capacity is exceeded.

Scaling up: Dynamically increase the processing capacity by adding more resources to handle the increased load.
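Three of the techniques above (rate limiting, buffering, and load shedding) fit in a short sketch. The `TokenBucket` class and `offer` function are hypothetical names, and the injectable clock exists only so the example is deterministic.

```python
import queue
import time
from typing import Callable


class TokenBucket:
    """Rate limiter: allows `rate` events per second with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the bucket's capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def offer(buffer: "queue.Queue[dict]", event: dict) -> bool:
    """Buffer the event if there is room; otherwise shed it and report False."""
    try:
        buffer.put_nowait(event)
        return True
    except queue.Full:
        return False


fake_now = [0.0]                                  # controllable clock for the demo
bucket = TokenBucket(rate=2.0, capacity=2.0, clock=lambda: fake_now[0])
burst = [bucket.allow(), bucket.allow(), bucket.allow()]   # third call exceeds the burst
fake_now[0] = 0.5                                 # half a second later: one token refilled
after_wait = bucket.allow()

buffer: "queue.Queue[dict]" = queue.Queue(maxsize=2)
accepted = [offer(buffer, {"id": i}) for i in range(3)]    # the third event is shed
```

Replacing `put_nowait` with the blocking `put` would make the producer wait instead of dropping data, which is backpressure in the stricter sense: the slowdown propagates upstream rather than turning into data loss.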

e) Security: Shielding Sensitive Data from Threats

Real-time data often contains sensitive information that requires protection from unauthorized access and breaches. Implementing a robust security framework is essential to safeguard the integrity and confidentiality of your data streams. Key security considerations include:

Encryption: Encrypt data both in transit and at rest to protect it from unauthorized access.

Authentication and authorization: Implement authentication mechanisms to verify the identity of users and services accessing the system. Utilize authorization policies to control access to sensitive data based on roles and permissions.

Network security: Secure your network infrastructure using firewalls, intrusion detection systems, and other security measures to prevent unauthorized access.

Data masking and anonymization: Consider masking or anonymizing sensitive data elements to protect privacy while still enabling data analysis.

Regular security audits: Conduct periodic security audits to identify vulnerabilities and ensure compliance with security policies and regulations.
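Of the measures listed above, data masking is the easiest to show in code. The sketch below masks a card number and replaces a user ID with a keyed hash, so events can still be grouped per user without exposing the raw identifier. The field names and the `protect` function are hypothetical, and a real secret would come from a secrets manager rather than a literal.

```python
import hashlib
import hmac
from typing import Any, Dict


def pseudonymize(value: str, secret: bytes) -> str:
    """Keyed hash: the same input gives the same token, but only with the same secret."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_card(number: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = "".join(c for c in number if c.isdigit())
    if len(digits) <= 4:
        return "*" * len(digits)       # too short to reveal anything safely
    return "*" * (len(digits) - 4) + digits[-4:]


def protect(event: Dict[str, Any], secret: bytes) -> Dict[str, Any]:
    """Return a copy of the event that is safer to pass to analytics."""
    safe = dict(event)                 # leave the original event untouched
    safe["user_id"] = pseudonymize(event["user_id"], secret)
    safe["card_number"] = mask_card(event["card_number"])
    return safe


event = {"user_id": "u-1", "card_number": "4111 1111 1111 1111", "amount": 20.0}
safe = protect(event, secret=b"demo-secret")   # demo value only
# safe["card_number"] == "************1111"
```

Note that pseudonymization is weaker than anonymization: anyone holding the secret can recompute the mapping, so the key needs the same protection as the data itself.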

By adhering to these best practices, you can build and operate robust, scalable, and secure real-time data processing systems that deliver valuable insights and drive informed decision-making. Remember that real-time systems are dynamic and evolving, requiring continuous monitoring, optimization, and adaptation to meet the ever-changing demands of the data-driven world.

Final Thoughts

Real-time data processing and stream analytics are transforming the way organizations leverage data. By embracing these technologies and adhering to best practices, businesses can unlock valuable insights, automate decision-making, and gain a competitive edge in today's dynamic environment. However, it's important to recognize that building and maintaining real-time systems requires careful planning, skilled engineers, and a commitment to continuous improvement.