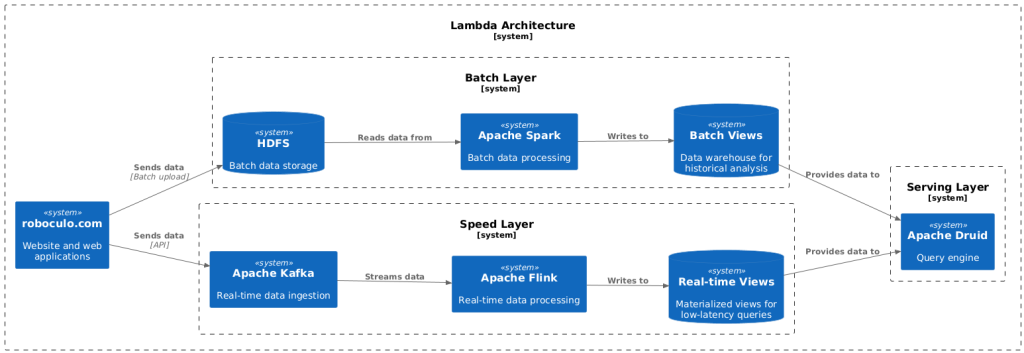

Lambda Architecture

Lambda Architecture is a data processing framework designed to handle massive amounts of data by combining both batch and real-time processing. It is particularly suited for scenarios where both historical accuracy and real-time insights are essential.

Key Components of Lambda Architecture

1. Batch Layer

- Processes large volumes of historical data.

- Provides the foundation for accurate, comprehensive results.

Example tools: Hadoop, Apache Spark, or cloud-based batch systems like AWS EMR.

2. Speed Layer

- Handles real-time data streams for low-latency processing.

- Provides fast, approximate results to address immediate data needs.

Example tools: Apache Storm, Spark Streaming, or Kafka Streams.

3. Serving Layer

- Merges outputs from both the batch and speed layers.

- Provides queryable views for applications and analytics.

Example tools: Cassandra, Elasticsearch, or HBase.

How Lambda Architecture Works

1. Incoming data is sent to both the batch layer and speed layer simultaneously.

2. The batch layer processes the data in bulk and generates historical views.

3. The speed layer processes the same data in real time, delivering approximate results quickly.

4. The serving layer combines results from both layers to provide accurate and low-latency insights.
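The four steps above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the page-view events, the `master_dataset` and `recent_events` lists, and the function names are all hypothetical, and in-memory counters stand in for real batch and streaming engines.

```python
from collections import Counter

# Hypothetical page-view events as (event_id, page) pairs.
master_dataset = [(1, "home"), (2, "pricing"), (3, "home")]  # covered by the last batch run
recent_events = [(4, "home"), (5, "docs")]                   # arrived after the last batch run


def build_batch_view(events):
    """Batch layer: recompute the full view from all historical data."""
    return Counter(page for _, page in events)


def build_speed_view(events):
    """Speed layer: view over the events the batch run has not seen yet."""
    return Counter(page for _, page in events)


def query(page, batch_view, speed_view):
    """Serving layer: merge both views at query time."""
    return batch_view[page] + speed_view[page]


batch_view = build_batch_view(master_dataset)
speed_view = build_speed_view(recent_events)

print(query("home", batch_view, speed_view))  # 3
print(query("docs", batch_view, speed_view))  # 1
```

Once the next batch run absorbs the recent events, the speed view for that period can be discarded, which is how the batch layer eventually replaces the approximate results.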

Advantages of Lambda Architecture

Scalability: Can handle massive amounts of data efficiently.

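Fault Tolerance: Because the batch layer periodically recomputes its views from the complete historical data, errors or gaps in the speed layer's approximate results are eventually corrected.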

Versatility: Supports both real-time and batch processing needs.

Challenges of Lambda Architecture

Complexity: Maintaining separate batch and speed layers requires significant effort.

Latency: The batch layer introduces delays since it processes data in bulk.

Cost: Running and maintaining two pipelines can be resource-intensive.

Some Use Cases for Lambda Architecture

- Applications requiring historical accuracy and real-time updates.

- Fraud detection systems where real-time insights are combined with historical data.

- Recommendation engines that need to balance recent user behavior with long-term trends.

- IoT applications that process sensor data in real time while storing detailed history.

Summary of Lambda Architecture

By combining the strengths of both real-time and batch processing, Lambda Architecture remains a powerful solution for organizations dealing with large-scale data systems.

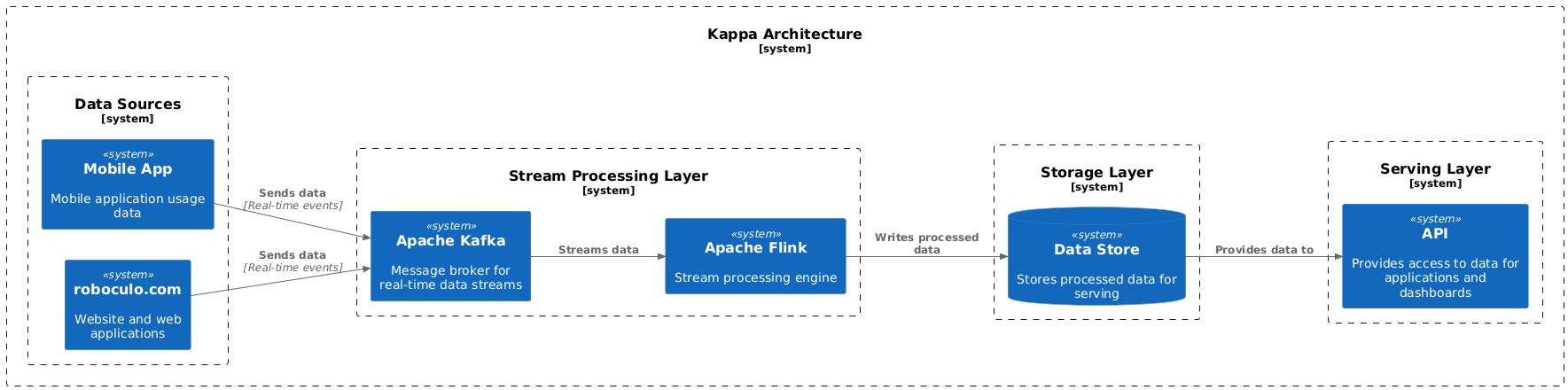

Kappa Architecture

Kappa Architecture is a simplified approach to real-time data processing, designed to address the complexity of Lambda Architecture by unifying data processing into a single pipeline. It is particularly suited for systems where real-time streaming is the priority, and batch processing can be minimized or omitted.

Key Components of Kappa Architecture

1. Stream Processing Pipeline

- Data is processed as a continuous stream.

- All data, whether real-time or historical, is handled through the same pipeline.

Example tools: Apache Kafka, Apache Flink, or Spark Streaming.

2. Storage Layer

- Stores raw and processed data, enabling reprocessing if needed.

- Storage systems often support replaying historical data streams.

Example tools: Apache Kafka (log storage), Amazon Kinesis, or cloud storage systems.

3. Serving Layer

- Stores the results from stream processing.

- Provides a queryable interface for applications and analytics.

Example tools: Cassandra, Elasticsearch, or BigQuery.

How Kappa Architecture Works

1. Incoming data is ingested as a real-time stream.

2. The stream processing engine processes the data in real time.

3. Processed data is stored in the storage layer for querying and downstream use.

4. If needed, historical data can be reprocessed by replaying the stored stream.
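As a rough sketch of these steps, the example below uses a plain Python list as the replayable log and a single `process` function for both live and replayed data. The sensor events and the `latest` and `maximum` functions are hypothetical; a real system would use a durable log such as a Kafka topic.

```python
# The append-only list stands in for a replayable stream.
event_log = []


def ingest(event):
    """Append every incoming event to the log; nothing is updated in place."""
    event_log.append(event)


def process(events, transform):
    """The single pipeline: the same code path serves live and replayed data."""
    view = {}
    for sensor, reading in events:
        view[sensor] = transform(view.get(sensor), reading)
    return view


def latest(_, reading):
    """Original logic: keep the most recent reading per sensor."""
    return reading


def maximum(current, reading):
    """Changed logic: keep the highest reading per sensor."""
    return reading if current is None else max(current, reading)


for event in [("s1", 20), ("s2", 18), ("s1", 25), ("s1", 22)]:
    ingest(event)

serving_view = process(event_log, latest)
reprocessed_view = process(event_log, maximum)  # rebuilt by replaying the log

print(serving_view)      # {'s1': 22, 's2': 18}
print(reprocessed_view)  # {'s1': 25, 's2': 18}
```

Changing the logic does not require a separate batch system here: the new view is produced by running the same pipeline over the stored stream from the beginning.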

Advantages of Kappa Architecture

Simplicity: Eliminates the need for separate batch and speed layers, reducing system complexity.

Real-Time Processing: Designed for low-latency, real-time data streams.

Reprocessing Capability: Historical data can be replayed and reprocessed without additional batch systems.

Cost-Efficiency: Maintains a single pipeline, reducing operational overhead.

Challenges of Kappa Architecture

Resource Intensive: Continuous stream processing can be resource-intensive.

Limited Batch Support: Not ideal for workloads where batch processing is critical.

Event Ordering: Handling events in the correct sequence can be challenging in some scenarios.

Some Use Cases for Kappa Architecture

- Real-time analytics and monitoring systems.

- IoT applications processing continuous streams of sensor data.

- Fraud detection and anomaly detection where real-time insights are crucial.

- Event-driven applications like clickstream analysis and recommendation systems.

Summary of Kappa Architecture

By streamlining data processing into a single, unified pipeline, Kappa Architecture simplifies the architecture while delivering efficient, real-time data processing capabilities. It is particularly well-suited for organizations prioritizing streaming data applications over batch processing.

Quick Comparison of Lambda and Kappa Architecture

While Lambda and Kappa Architectures both aim to process large-scale data, they differ in approach, complexity, and use cases, so it is worth comparing them to see why you might choose one over the other.

Quick Comparison

How Are They Similar?

- Both architectures aim to process massive data volumes efficiently.

- Both can handle real-time data streams.

- Both are scalable and fault-tolerant when implemented properly.

Why Choose One Over the Other?

Choose Lambda Architecture if:

- You need both real-time and batch processing.

- Historical accuracy and completeness are critical.

- Your system must integrate legacy batch workflows.

Choose Kappa Architecture if:

- Real-time processing is the priority.

- You prefer a simpler architecture with a single pipeline.

- Batch processing is minimal or unnecessary.

- You need flexibility to reprocess historical streams.

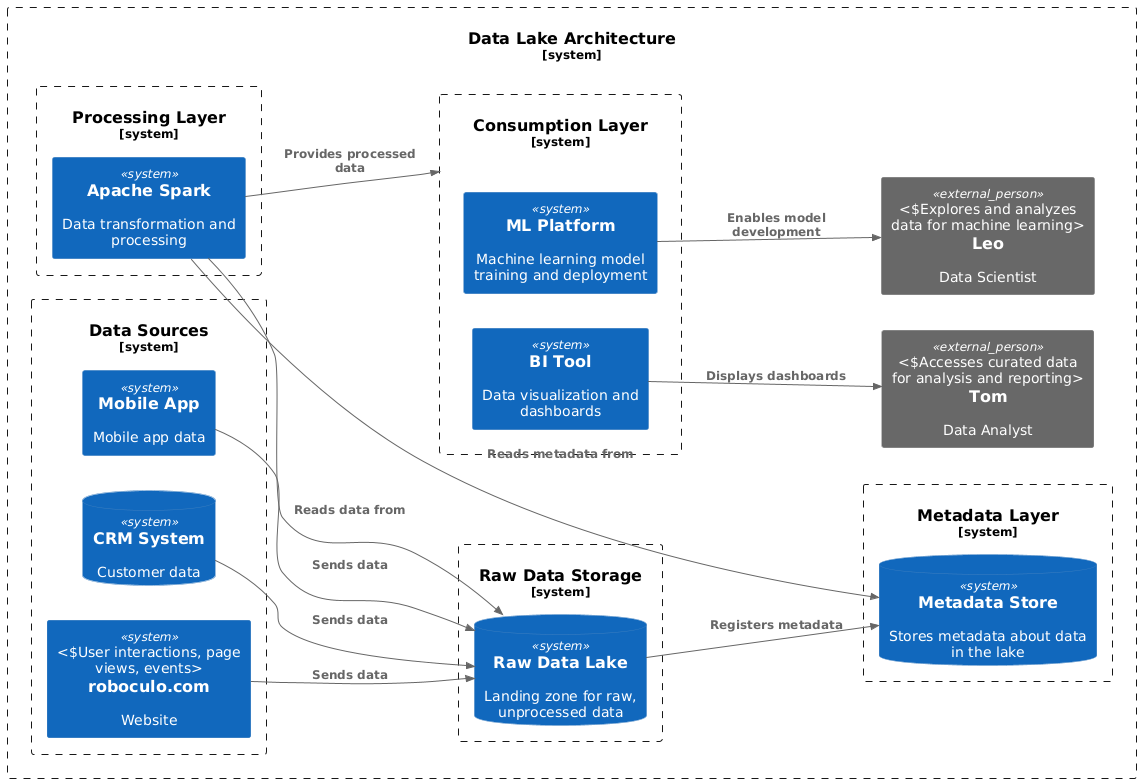

Data Lake Architecture

Data Lake Architecture is a modern approach to data storage that allows organizations to store vast amounts of structured, semi-structured, and unstructured data in its native format. This flexibility makes data lakes an essential part of big data ecosystems.

Key Components of Data Lake Architecture

1. Raw Data Storage

- Stores all data, regardless of its format or source.

- Data is kept in its native, raw form until needed.

Example tools: AWS S3, Azure Data Lake, Google Cloud Storage, or HDFS.

2. Metadata Layer

- Maintains cataloging and indexing of data to facilitate efficient retrieval.

- Enables governance and management of stored data.

Example tools: AWS Glue, Apache Atlas, or Hive Metastore.

3. Processing Layer

- Processes data as needed for analytics, machine learning, or reporting.

- Supports batch processing and real-time streaming.

Example tools: Apache Spark, Presto, Databricks, or AWS EMR.

4. Consumption Layer

- Provides data access for analytics, reporting, and machine learning.

- Enables integration with BI tools, dashboards, and external systems.

Example tools: Power BI, Tableau, Snowflake, or Redshift Spectrum.

How Data Lake Architecture Works

1. Data is ingested from multiple sources, including databases, IoT devices, and log files.

2. Raw data is stored in its native format in the storage layer.

3. Metadata catalogs enable efficient discovery and governance of data.

4. The processing layer transforms and processes the data as needed.

5. Data is made available to end-users via the consumption layer for analytics, visualization, and reporting.
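The flow above can be illustrated with a small schema-on-read sketch. Everything here is an assumption made for the example: two dictionaries stand in for object storage and a metadata catalog, and the paths, sources, and payloads are invented.

```python
import csv
import io
import json

# Hypothetical in-memory stand-ins for object storage and a metadata catalog.
raw_storage = {}
catalog = {}


def ingest(path, payload, fmt, source):
    """Store the payload untouched and register it in the catalog."""
    raw_storage[path] = payload
    catalog[path] = {"format": fmt, "source": source}


def read(path):
    """Schema-on-read: parse the raw bytes only when a consumer asks for them."""
    fmt = catalog[path]["format"]
    text = raw_storage[path].decode("utf-8")
    if fmt == "json":
        return [json.loads(line) for line in text.splitlines()]
    if fmt == "csv":
        return list(csv.DictReader(io.StringIO(text)))
    raise ValueError(f"unsupported format: {fmt}")


ingest(
    "raw/iot/readings.json",
    b'{"sensor": "s1", "temp": 21.5}\n{"sensor": "s2", "temp": 19.0}',
    "json",
    "iot",
)
ingest("raw/crm/customers.csv", b"id,name\n1,Ada\n2,Linus", "csv", "crm")

# Discovery goes through the catalog rather than by scanning storage.
iot_paths = [path for path, meta in catalog.items() if meta["source"] == "iot"]
print(iot_paths)
print(read("raw/crm/customers.csv"))
```

The raw bytes are never rewritten on ingestion; structure is applied only in `read`, which is what lets a lake accept new formats without upfront schema design.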

Advantages of Data Lake Architecture

Scalability: Can store massive amounts of diverse data cost-effectively.

Flexibility: Supports all data types without requiring upfront schema design.

Cost-Efficiency: Cloud-based data lakes offer low storage costs.

Supports Modern Analytics: Ideal for machine learning, real-time analytics, and BI workloads.

Challenges of Data Lake Architecture

Data Governance: Managing and securing vast volumes of raw data can be challenging.

Data Quality: Without proper curation, data lakes risk becoming “data swamps.”

Performance: Query performance can be slow without optimization.

Some Use Cases for Data Lake Architecture

- Storing large volumes of IoT sensor data for analysis.

- Centralized data repositories for machine learning model training.

- Data integration for real-time and batch analytics workflows.

- Log and clickstream analysis for business insights.

Summary of Data Lake Architecture

By offering flexible, scalable, and cost-effective storage, Data Lake Architecture has become a cornerstone for modern data platforms, enabling organizations to harness the full potential of their data.

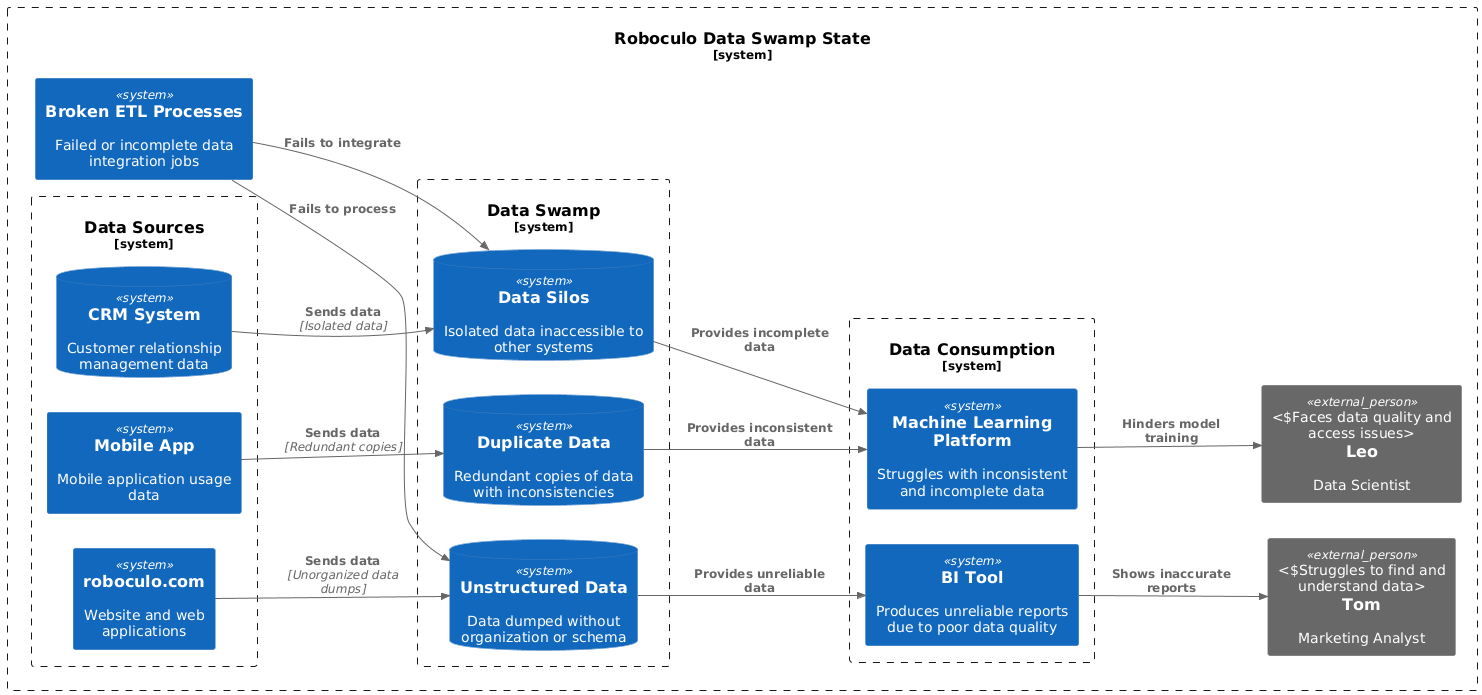

Data Swamp State

I debated whether to mention the Data Swamp at all, but after discussing it with several clients in the past, I want to clarify where we stand when the data layer ends up in that state and, if we want to avoid it, how to prevent our data layer from becoming one.

A Data Swamp can be a deliberate choice, but more often it is simply a state the data layer drifted into unintentionally. Let me explain.

A Data Swamp refers to a poorly managed Data Lake where data is disorganized, lacking governance, and difficult to use. Over time, without proper metadata, indexing, and governance, a Data Lake can devolve into a Data Swamp, making it nearly impossible to derive value from the stored data.

In short, a data swamp is not an architecture but rather a deteriorated state of a data lake. It occurs when a data lake becomes unmanageable and difficult to navigate due to poor data management practices.

Comparison: Data Lake vs. Data Swamp

Preventing a Data Swamp

- Implement strong data governance policies.

- Ensure proper metadata management and indexing.

- Regularly validate and curate stored data.

- Use automated tools for data discovery, quality checks, and lineage tracking.

- Define clear processes for data ingestion and lifecycle management.

By addressing these challenges, organizations can ensure their Data Lakes remain valuable assets instead of devolving into unusable Data Swamps.
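Some of these practices can be automated. The sketch below audits catalog entries against a small policy; the required fields, the 90-day threshold, and the dataset names are hypothetical and only meant to show the idea of a recurring quality check.

```python
from datetime import date

# Hypothetical policy: every dataset needs an owner, a schema, and a
# validation that is no older than MAX_AGE_DAYS.
REQUIRED_FIELDS = ("owner", "schema")
MAX_AGE_DAYS = 90


def audit(catalog, today):
    """Return {dataset: [problems]} for entries that violate the policy."""
    findings = {}
    for name, meta in catalog.items():
        problems = [f"missing {field}" for field in REQUIRED_FIELDS if not meta.get(field)]
        last_validated = meta.get("last_validated")
        if last_validated is None:
            problems.append("never validated")
        elif (today - last_validated).days > MAX_AGE_DAYS:
            problems.append("stale validation")
        if problems:
            findings[name] = problems
    return findings


catalog = {
    "sales/orders": {"owner": "sales", "schema": {"id": "int"}, "last_validated": date(2024, 5, 1)},
    "tmp/export_final_v2": {"owner": None, "schema": None, "last_validated": None},
    "iot/readings": {"owner": "platform", "schema": {"temp": "float"}, "last_validated": date(2023, 1, 1)},
}

findings = audit(catalog, today=date(2024, 6, 1))
for dataset, problems in findings.items():
    print(dataset, problems)
```

Running a check like this on a schedule surfaces unowned or unvalidated datasets early, before they accumulate.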

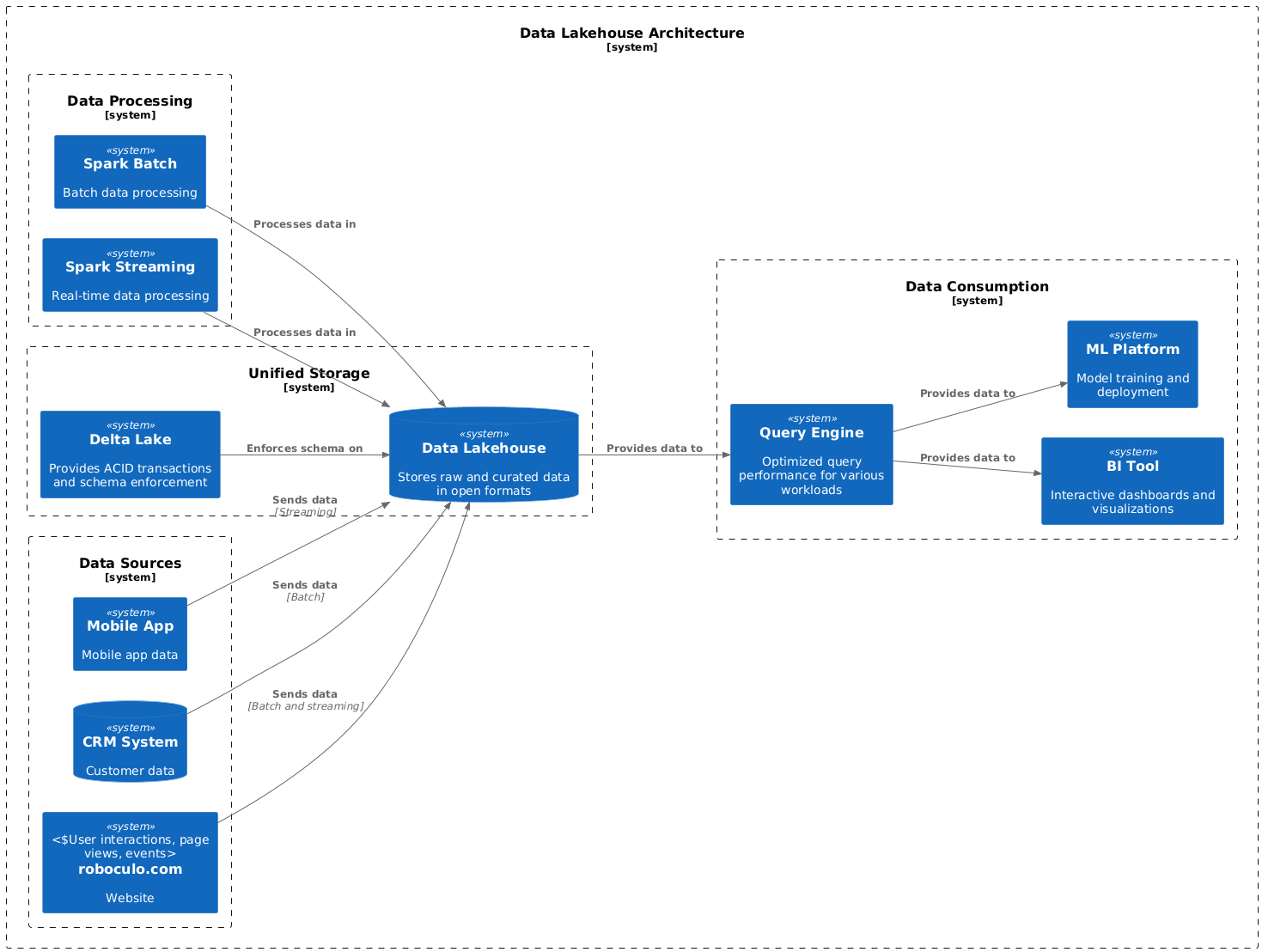

Data Lakehouse Architecture

Staying with the Data Lake a little longer, it is worth mentioning one more architecture that builds on it.

A Data Lakehouse combines the scalability and flexibility of a Data Lake with the performance and structure of a Data Warehouse. It addresses the limitations of Data Lake Architecture, enabling advanced analytics on structured and unstructured data in a unified system.

Key Features of Data Lakehouse Architecture

1. Unified Storage

- Stores both structured and unstructured data in the same system.

Example tools: Databricks Lakehouse, Snowflake, Google BigLake.

2. Schema Enforcement

- Supports schema validation for data consistency.

Example tools: Delta Lake, Apache Iceberg.

3. Real-Time Processing

- Enables real-time data analytics and transactions.

Example tools: Spark Streaming, Flink.

4. Optimized Query Performance

- Uses indexing and caching for faster queries.

Example tools: Presto, AWS Athena.

How Data Lakehouse Works

1. Data is ingested from diverse sources into the unified storage system.

2. Schema enforcement ensures data consistency during storage.

3. The processing layer provides analytics capabilities with optimized queries.

4. Data is made available for real-time and batch analytics in the same environment.
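Schema enforcement on write, the second step above, is the main behavioral difference from a plain data lake. The sketch below is a simplified illustration and not how any specific table format implements it; `ORDERS_SCHEMA`, `Table`, and `SchemaError` are hypothetical names.

```python
# Hypothetical table schema: column name -> required Python type.
ORDERS_SCHEMA = {"order_id": int, "amount": float, "currency": str}


class SchemaError(ValueError):
    """Raised when a batch does not match the table schema."""


class Table:
    def __init__(self, schema):
        self.schema = schema
        self.rows = []

    def append(self, batch):
        """Validate the whole batch first so a bad row never causes a partial write."""
        for row in batch:
            if set(row) != set(self.schema):
                raise SchemaError(f"columns {sorted(row)} do not match schema")
            for column, expected in self.schema.items():
                if not isinstance(row[column], expected):
                    raise SchemaError(f"{column!r} must be {expected.__name__}")
        self.rows.extend(batch)


orders = Table(ORDERS_SCHEMA)
orders.append([{"order_id": 1, "amount": 9.99, "currency": "EUR"}])

try:
    orders.append([
        {"order_id": 2, "amount": 5.00, "currency": "EUR"},
        {"order_id": "3", "amount": 7.50, "currency": "EUR"},  # wrong type
    ])
except SchemaError as err:
    print("rejected:", err)

print(len(orders.rows))  # 1
```

The second batch is rejected as a whole, including its valid first row, so readers never see a half-written batch.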

Comparison: Data Lake vs. Data Lakehouse

Advantages of Data Lakehouse

- Unified Analytics: Supports advanced analytics on all types of data.

- Improved Performance: Faster query execution compared to Data Lakes.

- Data Governance: Schema enforcement ensures better data quality.

Challenges of Data Lakehouse

Cost: Higher setup and infrastructure costs.

Complexity: Requires expertise to manage the unified architecture.

By combining the best features of Data Lakes and Data Warehouses, Data Lakehouses offer an integrated platform for modern data needs, balancing flexibility, scalability, and performance.

Universal Data Lakehouse

The Data Lakehouse brings up one more concept, the Universal Data Lakehouse, so here are a few words about it.

The Universal Data Lakehouse concept strives to be vendor- and tool-neutral, as its primary goal is to integrate data across multiple organizations or platforms into a cohesive analytical environment. Unlike a standard Data Lakehouse, which is often tied to specific organizational tools or infrastructure, the Universal Data Lakehouse focuses on interoperability and collaboration across ecosystems.

However, full neutrality depends on implementation and whether organizations adopt standardized, open protocols and platforms, such as Apache Arrow or Delta Lake, to ensure seamless integration. Some vendor-specific solutions may claim universality but still encourage lock-in through proprietary enhancements.

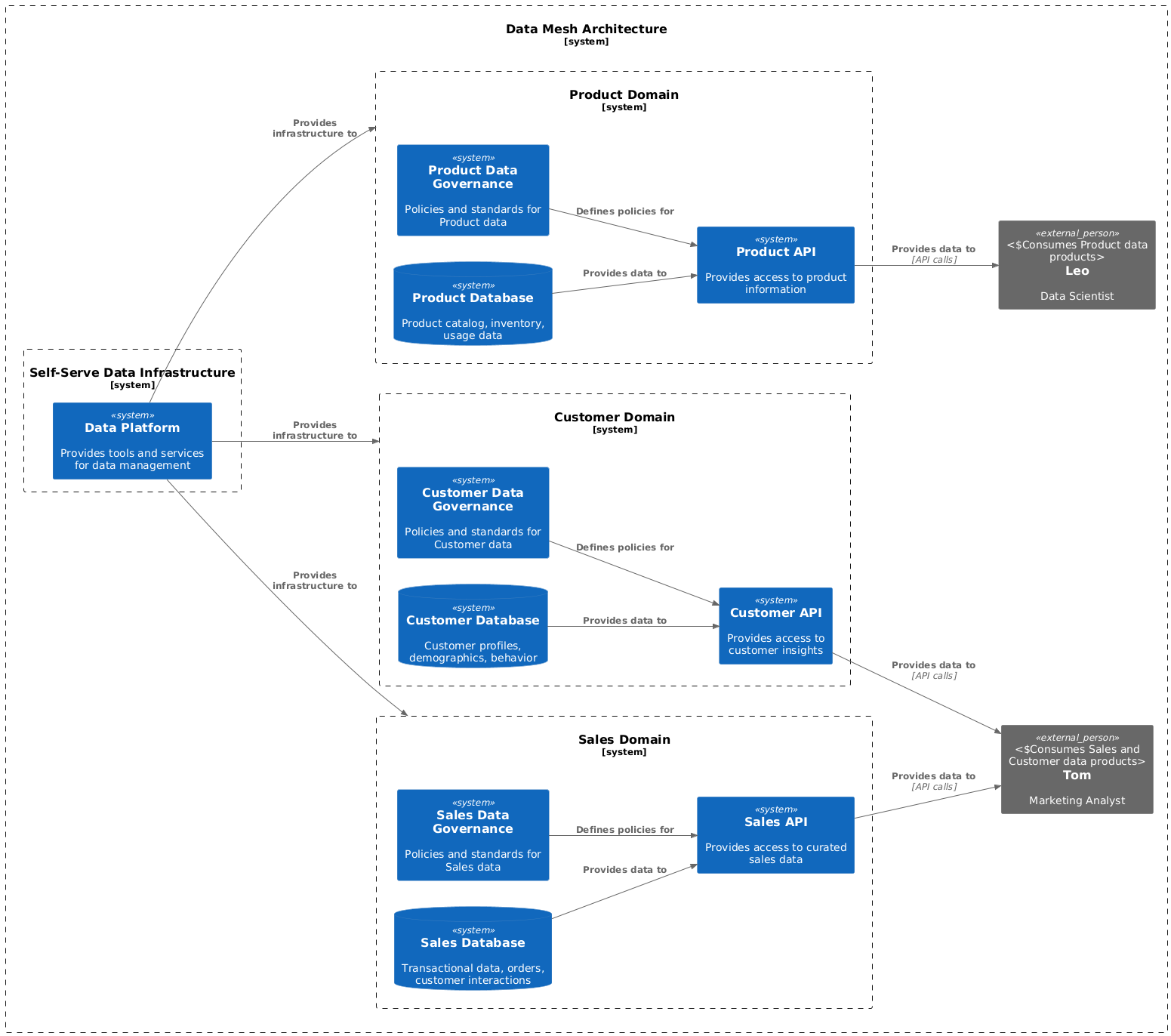

Data Mesh Architecture

Data Mesh Architecture is a decentralized approach to managing and processing data, designed to overcome the limitations of centralized data architectures like data lakes. By treating data as a product and aligning it with business domains, Data Mesh empowers teams to manage their data autonomously while ensuring interoperability across the organization.

Key Components of Data Mesh Architecture

1. Domain-Oriented Ownership

- Data is owned and managed by domain-specific teams, such as marketing, sales, or operations.

- Teams are responsible for their data pipelines, quality, and lifecycle.

2. Data as a Product

- Treats data as a valuable product with clear ownership, SLAs, and accessibility for consumers.

- Ensures high-quality, discoverable, and usable data.

3. Self-Serve Data Infrastructure

- Provides the tools and infrastructure needed to enable teams to manage their data independently.

- Often involves tools like Kubernetes, Snowflake, or cloud-native services.

4. Federated Computational Governance

- Combines decentralized ownership with global governance policies for security, compliance, and interoperability.

- Ensures consistency without compromising team autonomy.

How Data Mesh Architecture Works

1. Data is divided into domain-specific products managed by respective teams.

2. A self-serve infrastructure platform supports data ingestion, storage, and processing.

3. Global governance policies ensure security, metadata management, and data interoperability.

4. Data products are made discoverable and accessible to other teams through standardized APIs or interfaces.
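One way to picture these steps is a shared registry where domain teams publish their data products and a global policy is checked at publish time. The sketch below is illustrative only: `DataProduct`, `Registry`, and the `REQUIRED_METADATA` policy are hypothetical, not part of any Data Mesh standard.

```python
from dataclasses import dataclass, field

# Hypothetical global policy that every domain team must satisfy.
REQUIRED_METADATA = {"owner", "freshness_sla_hours", "contains_pii"}


@dataclass
class DataProduct:
    domain: str
    name: str
    metadata: dict
    rows: list = field(default_factory=list)

    def read(self):
        """Standardized interface for consumers, whatever the domain stores internally."""
        return list(self.rows)


class Registry:
    def __init__(self):
        self._products = {}

    def publish(self, product):
        """Federated governance: reject products that miss required metadata."""
        missing = REQUIRED_METADATA - set(product.metadata)
        if missing:
            raise ValueError(f"{product.name}: missing metadata {sorted(missing)}")
        self._products[f"{product.domain}.{product.name}"] = product

    def discover(self, domain=None):
        return sorted(k for k, p in self._products.items() if domain in (None, p.domain))

    def get(self, key):
        return self._products[key]


registry = Registry()
registry.publish(DataProduct(
    domain="sales",
    name="orders",
    metadata={"owner": "sales-team", "freshness_sla_hours": 24, "contains_pii": False},
    rows=[{"order_id": 1, "amount": 9.99}],
))

try:
    registry.publish(DataProduct(domain="marketing", name="campaigns", metadata={"owner": "mkt"}))
except ValueError as err:
    print("rejected:", err)

print(registry.discover())
print(registry.get("sales.orders").read())
```

Ownership stays with the domain team that builds the product, while the registry enforces only the minimal rules needed for discovery and interoperability.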

Advantages of Data Mesh Architecture

Scalability: Enables decentralized data management, supporting large-scale growth.

Flexibility: Empowers teams to build and manage their own data solutions.

Improved Data Quality: Treating data as a product enforces accountability and quality.

Faster Insights: Teams can access domain-specific data without waiting for centralized teams.

Challenges of Data Mesh Architecture

Complexity: Requires strong coordination and organizational alignment.

Tooling: Demands robust infrastructure and tools to support self-serve capabilities.

Data Interoperability: Standardizing data products across domains can be challenging.

Governance: Balancing decentralization with global governance requires careful planning.

Some Use Cases for Data Mesh Architecture

- Large enterprises with multiple business units managing vast datasets.

- Organizations seeking to scale their data platforms without bottlenecks.

- Teams requiring domain-specific analytics and insights.

- Decentralized organizations with distributed data ownership.

Summary of Data Mesh Architecture

By aligning data ownership with business domains and treating data as a product, Data Mesh Architecture enables organizations to scale efficiently while maintaining data quality, governance, and accessibility.

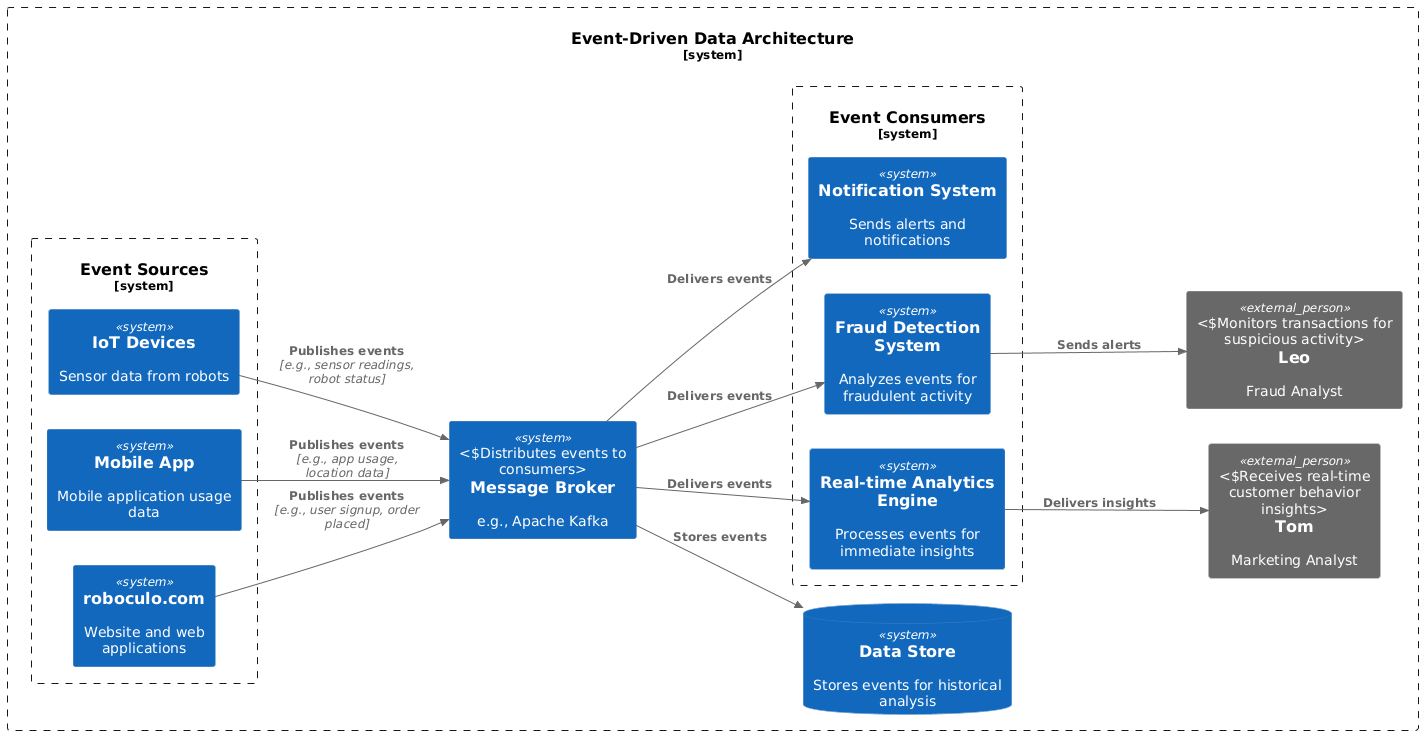

Event-Driven Architecture

Event-Driven Architecture (EDA) is a data architecture pattern that processes and reacts to events in real time. It is designed to handle high-throughput, real-time scenarios where applications or systems need to respond to changes or actions as they occur.

Key Components of Event-Driven Architecture

1. Event Producers

- Systems or applications that generate events when changes occur (e.g., user actions, IoT sensor updates).

Examples: microservices, IoT devices, or transaction systems.

2. Event Brokers

- Intermediate systems that manage and distribute events to consumers.

- Enable decoupling of event producers and consumers.

Example tools: Apache Kafka, RabbitMQ, Amazon Kinesis, or Azure Event Hub.

3. Event Consumers

- Systems or applications that process events and trigger actions.

- Consumers can analyze, transform, or store events based on specific requirements.

Example tools: Apache Flink, Spark Streaming, or AWS Lambda.

4. Event Storage

- Persistent storage of events for replay or analysis.

Example tools: Apache Kafka (log storage), cloud storage, or relational databases.

How Event-Driven Architecture Works

1. An event producer generates an event whenever a significant action or change occurs.

2. The event broker receives the event and routes it to one or more event consumers.

3. Event consumers process the event in real time and trigger appropriate workflows or actions.

4. Events may be stored for further analysis, auditing, or replay.
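The sketch below walks through these four steps with an in-memory broker. It is a simplification under stated assumptions: the `Broker` class, the `order_placed` topic, and the handlers are hypothetical, and delivery is synchronous, whereas real brokers deliver asynchronously over a network.

```python
from collections import defaultdict


class Broker:
    """In-memory stand-in for an event broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self.event_log = []  # retained for auditing or replay

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        self.event_log.append((topic, event))
        for handler in self._subscribers[topic]:
            handler(event)


inventory = {"sku-1": 5}
notifications = []


def update_inventory(event):
    inventory[event["sku"]] -= event["quantity"]


def notify_customer(event):
    notifications.append(f"Order {event['order_id']} confirmed")


broker = Broker()
broker.subscribe("order_placed", update_inventory)
broker.subscribe("order_placed", notify_customer)

# The producer only knows the topic name, not who consumes the event.
broker.publish("order_placed", {"order_id": 42, "sku": "sku-1", "quantity": 2})

print(inventory)      # {'sku-1': 3}
print(notifications)  # ['Order 42 confirmed']
```

Adding a third consumer, for example an analytics handler, would only need another `subscribe` call; the producer code would not change.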

Advantages of Event-Driven Architecture

Real-Time Responsiveness: Enables immediate processing and actions in response to events.

Scalability: Decoupling producers and consumers allows independent scaling of systems.

Flexibility: Supports a variety of event sources and consumers, allowing for extensibility.

Fault Tolerance: Events can be replayed from storage in case of failures.

Challenges of Event-Driven Architecture

Complexity: Managing event flows and ensuring correct event ordering can be challenging.

Latency: Event propagation delays can occur in high-throughput systems.

Event Duplication: Ensuring exactly-once delivery of events requires careful design.

Monitoring: Debugging and monitoring distributed event-driven systems can be difficult.
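A common way to cope with duplicate deliveries is to make the consumer idempotent, so that processing the same event twice has no additional effect. The sketch below assumes each event carries a unique `event_id` (a hypothetical field) and keeps the set of seen IDs in memory; a production system would need to persist that set.

```python
class IdempotentConsumer:
    def __init__(self):
        self.balance = 0
        self._seen = set()

    def handle(self, event):
        """Apply the event once; return False for a duplicate delivery."""
        if event["event_id"] in self._seen:
            return False
        self._seen.add(event["event_id"])
        self.balance += event["amount"]
        return True


consumer = IdempotentConsumer()
deliveries = [
    {"event_id": "e1", "amount": 50},
    {"event_id": "e2", "amount": 25},
    {"event_id": "e1", "amount": 50},  # redelivered, e.g. after a timeout
]

results = [consumer.handle(event) for event in deliveries]
print(results)           # [True, True, False]
print(consumer.balance)  # 75
```

With this approach the broker only needs to guarantee at-least-once delivery, and the consumer takes care of the rest.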

Some Use Cases for Event-Driven Architecture

- IoT Applications: Processing sensor data and triggering actions in real time.

- Fraud Detection: Identifying suspicious activities as they occur.

- E-Commerce: Real-time order processing, inventory updates, and customer notifications.

- Log and Metrics Analysis: Monitoring application performance and reacting to anomalies.

- Real-Time Analytics: Stream processing for dashboards and insights.

Summary of Event-Driven Architecture

By enabling real-time responsiveness and scalability, Event-Driven Architecture is ideal for modern systems requiring immediate processing and actions. It empowers organizations to build agile, scalable, and decoupled systems that can adapt to changing data in real time.

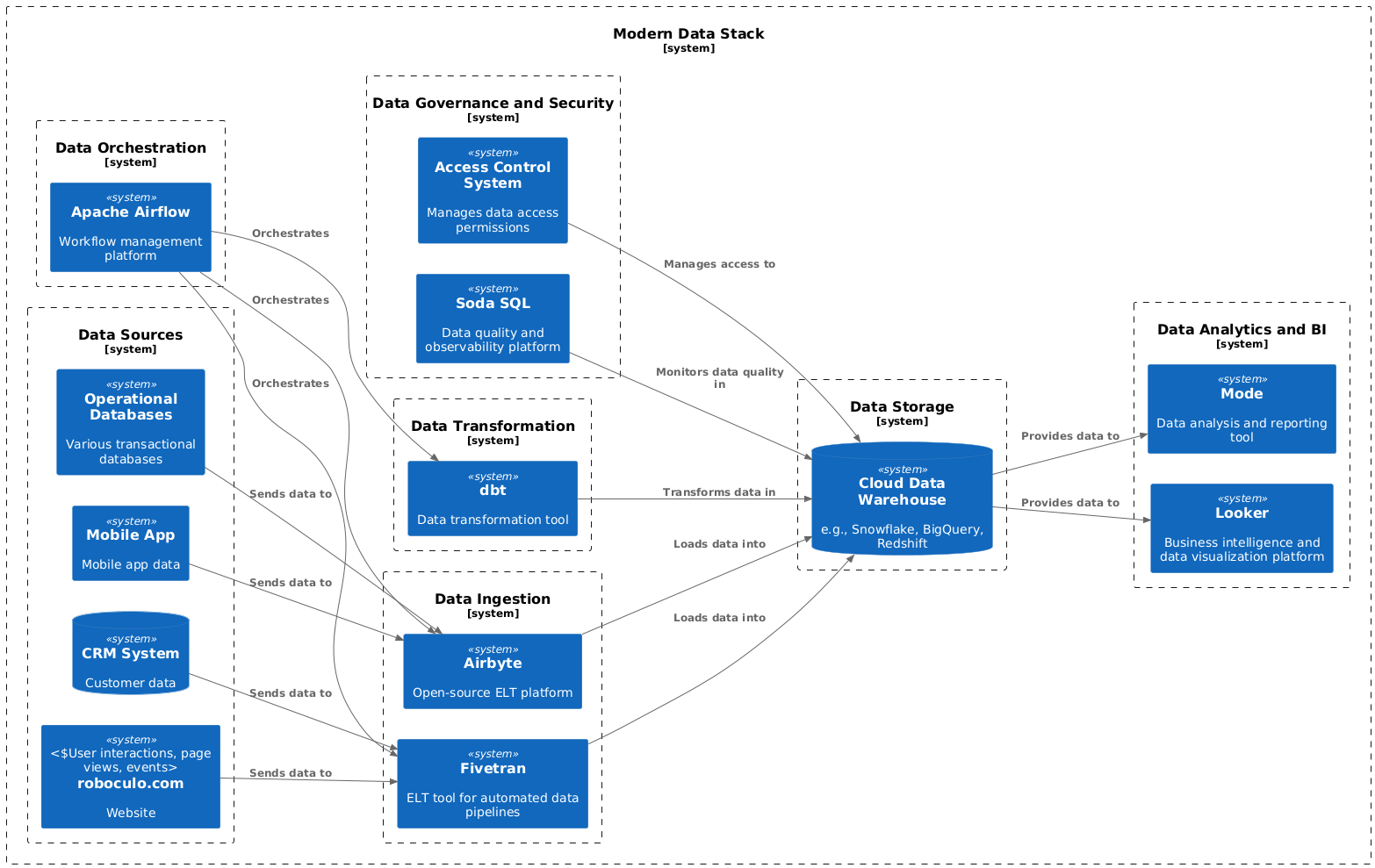

Modern Data Stack

The Modern Data Stack (MDS) is a cloud-native, modular data architecture designed to handle the entire data lifecycle, from ingestion and transformation to analytics and reporting. It leverages best-of-breed tools for scalability, automation, and cost efficiency, making it a popular choice for modern businesses.

Key Components of Modern Data Stack

1. Data Ingestion

- Tools for extracting data from various sources (databases, APIs, applications) and loading it into a centralized platform.

Example tools: Fivetran, Stitch, Airbyte.

2. Data Storage

- Cloud-based data warehouses or lakes that act as the central repository for raw and processed data.

Example tools: Snowflake, Google BigQuery, Amazon Redshift.

3. Data Transformation

- Tools that clean, model, and prepare data for analysis.

Example tools: dbt (Data Build Tool).

4. Data Orchestration

- Workflow management tools for scheduling and automating data pipelines.

Example tools: Apache Airflow, Prefect.

5. Data Analytics and BI

- Platforms for querying, visualizing, and reporting insights from data.

Example tools: Looker, Tableau, Power BI.

6. Data Governance and Security

- Tools for data cataloging, lineage tracking, and compliance management.

Example tools: Alation, Collibra, Immuta.

How Modern Data Stack Works

1. Data is ingested from multiple sources using ETL/ELT tools.

2. Raw data is stored in a cloud warehouse or lake.

3. Transformation tools clean and model the data, preparing it for analysis.

4. Orchestration tools automate and manage the data pipeline workflows.

5. Analysts and stakeholders access insights through BI tools.

6. Governance tools ensure data quality, security, and compliance.
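The orchestration step is easiest to see in code. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to run three hypothetical tasks in dependency order; the `warehouse` dictionary and the task functions are stand-ins for real ingestion, transformation, and BI tools.

```python
from graphlib import TopologicalSorter

warehouse = {}


def ingest():
    # Stand-in for an ingestion tool loading raw rows.
    warehouse["raw_orders"] = [{"id": 1, "amount": "10.5"}, {"id": 2, "amount": "4.5"}]


def transform():
    # Stand-in for a transformation model that casts types.
    warehouse["orders"] = [
        {"id": row["id"], "amount": float(row["amount"])} for row in warehouse["raw_orders"]
    ]


def report():
    # Stand-in for a BI query.
    warehouse["revenue"] = sum(row["amount"] for row in warehouse["orders"])


tasks = {"ingest": ingest, "transform": transform, "report": report}
# Each task maps to the set of tasks it depends on.
dependencies = {"ingest": set(), "transform": {"ingest"}, "report": {"transform"}}

run_order = list(TopologicalSorter(dependencies).static_order())
for name in run_order:
    tasks[name]()

print(run_order)             # ['ingest', 'transform', 'report']
print(warehouse["revenue"])  # 15.0
```

Real orchestrators add scheduling, retries, and monitoring on top, but the core idea is the same: declare dependencies and let the tool derive the execution order.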

Advantages of Modern Data Stack

Scalability: Cloud-native tools allow for seamless scaling with business growth.

Cost Efficiency: Pay-as-you-go pricing models reduce upfront infrastructure costs.

Flexibility: Modular tools enable customization and integration with existing systems.

Automation: Automated workflows reduce manual intervention and errors.

Ease of Use: Intuitive tools empower non-technical users to leverage data.

Challenges of Modern Data Stack

Tool Fragmentation: Managing multiple tools can increase complexity.

Vendor Lock-In: Heavy reliance on specific cloud vendors or platforms.

Skill Requirements: Requires expertise in cloud-based tools and data pipelines.

Latency: Real-time analytics may require additional configurations.

Some Use Cases for Modern Data Stack

- Building centralized analytics platforms for real-time decision-making.

- Supporting agile data teams in scaling their operations.

- Driving marketing analytics with near real-time customer insights.

- Enabling machine learning pipelines with clean, reliable data.

Summary of Modern Data Stack

The Modern Data Stack has revolutionized how organizations manage and analyze data by offering a scalable, efficient, and flexible approach. Its modular design ensures businesses can adapt quickly to changing data needs while maintaining high performance and reliability.

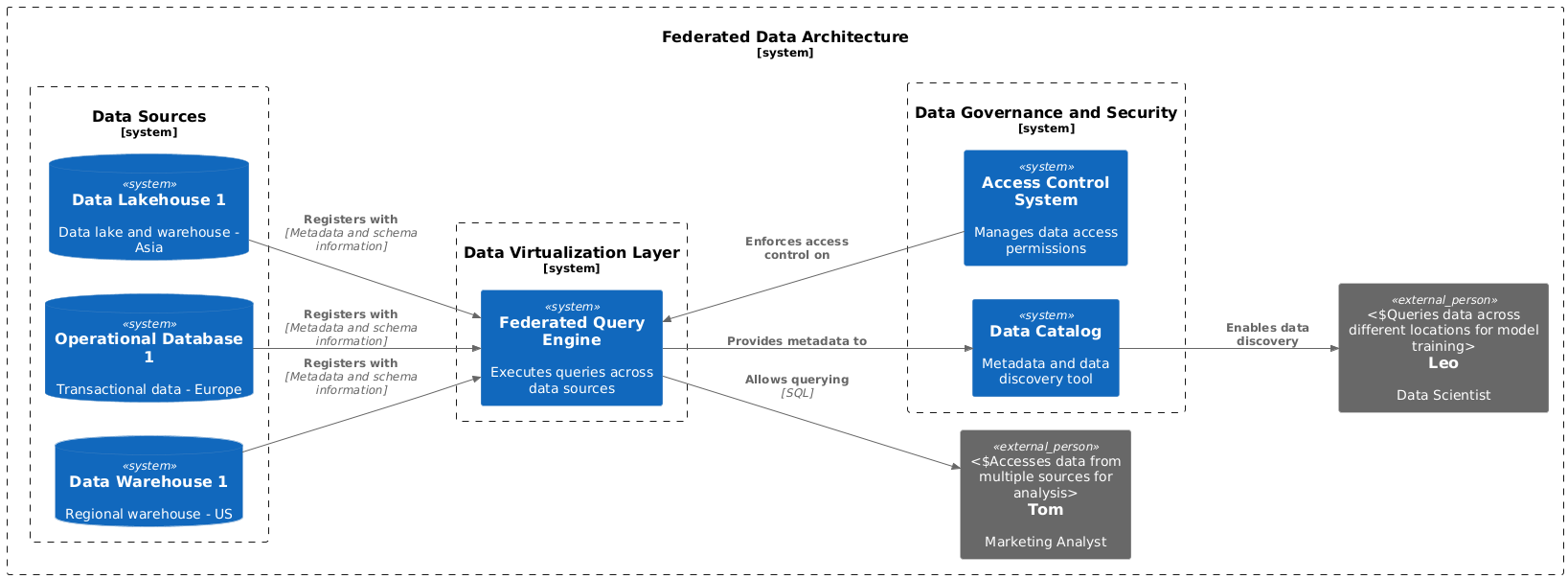

Federated Data Architecture

Federated Data Architecture is an approach that provides unified access to data distributed across independent systems without physically moving or consolidating it. By combining a data virtualization layer with a federated query engine, it lets users query data as if it resided in a single system while the data itself stays in its source systems.

Key Components of Federated Data Architecture

1. Data Sources

- Distributed, independent systems or databases storing data across different domains or organizations.

Examples: Relational databases, NoSQL systems, data lakes, or operational databases.

2. Federated Query Engine

- Enables real-time querying across multiple data sources without physically moving the data.

Example tools: Apache Drill, Presto, Starburst, or Denodo.

3. Data Virtualization Layer

- Abstracts the complexity of distributed data sources and provides a unified interface for data access.

- Ensures users can query data as if it resides in a single system.

4. Governance and Security

- Ensures access control, data privacy, and compliance with regulations across distributed systems.

Example tools: Immuta, Privacera.

How Federated Data Architecture Works

1. Data remains in its original location and format, distributed across independent systems.

2. The data virtualization layer creates a unified logical model for the distributed data.

3. A federated query engine processes queries across the systems and retrieves results in real-time.

4. Governance tools manage access, security, and compliance, ensuring a consistent framework across all sources.
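To make the query step concrete, the sketch below uses two separate in-memory SQLite databases as the independent sources. The `crm` and `billing` databases, their tables, and the `revenue_by_customer` function are hypothetical; a real federated engine would plan and push down queries automatically.

```python
import sqlite3

# Two independent sources; data leaves them only as query results.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Linus")])

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE invoices (customer_id INTEGER, amount REAL)")
billing.executemany(
    "INSERT INTO invoices VALUES (?, ?)", [(1, 120.0), (1, 30.0), (2, 75.0)]
)


def revenue_by_customer():
    """Push the aggregation down to each source, then join the small results."""
    names = dict(crm.execute("SELECT id, name FROM customers"))
    totals = billing.execute(
        "SELECT customer_id, SUM(amount) FROM invoices GROUP BY customer_id"
    )
    return {names[customer_id]: total for customer_id, total in totals}


revenue = revenue_by_customer()
print(revenue)
```

Only the aggregated totals cross the boundary between systems, not the individual invoices, which is what keeps data movement low in this pattern.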

Advantages of Federated Data Architecture

No Data Movement: Eliminates the need to copy or consolidate data into a central repository.

Cost Efficiency: Reduces storage duplication and associated costs.

Scalability: Easily integrates new data sources without restructuring.

Security: Maintains data privacy by keeping it in its source system.

Challenges of Federated Data Architecture

Performance: Querying distributed systems in real time can introduce latency.

Complexity: Requires robust query engines and virtualization tools to handle heterogeneity.

Governance: Managing security and compliance across multiple systems can be challenging.

Data Quality: Ensuring consistency and reliability of data across diverse sources.

Some Use Cases for Federated Data Architecture

- Cross-Organizational Data Sharing: Accessing data across multiple organizations without duplication.

- Hybrid Cloud Environments: Integrating on-premises and cloud-based data systems.

- Compliance and Privacy: Ensuring secure access to sensitive data while meeting regulatory requirements.

- Real-Time Analytics: Querying distributed operational systems for up-to-date insights.

Summary of Federated Data Architecture

By enabling seamless access to distributed data without consolidation, Federated Data Architecture provides organizations with a flexible, scalable, and secure solution to integrate and analyze data across silos. It is especially beneficial for hybrid environments, cross-organization collaboration, and compliance-sensitive domains.

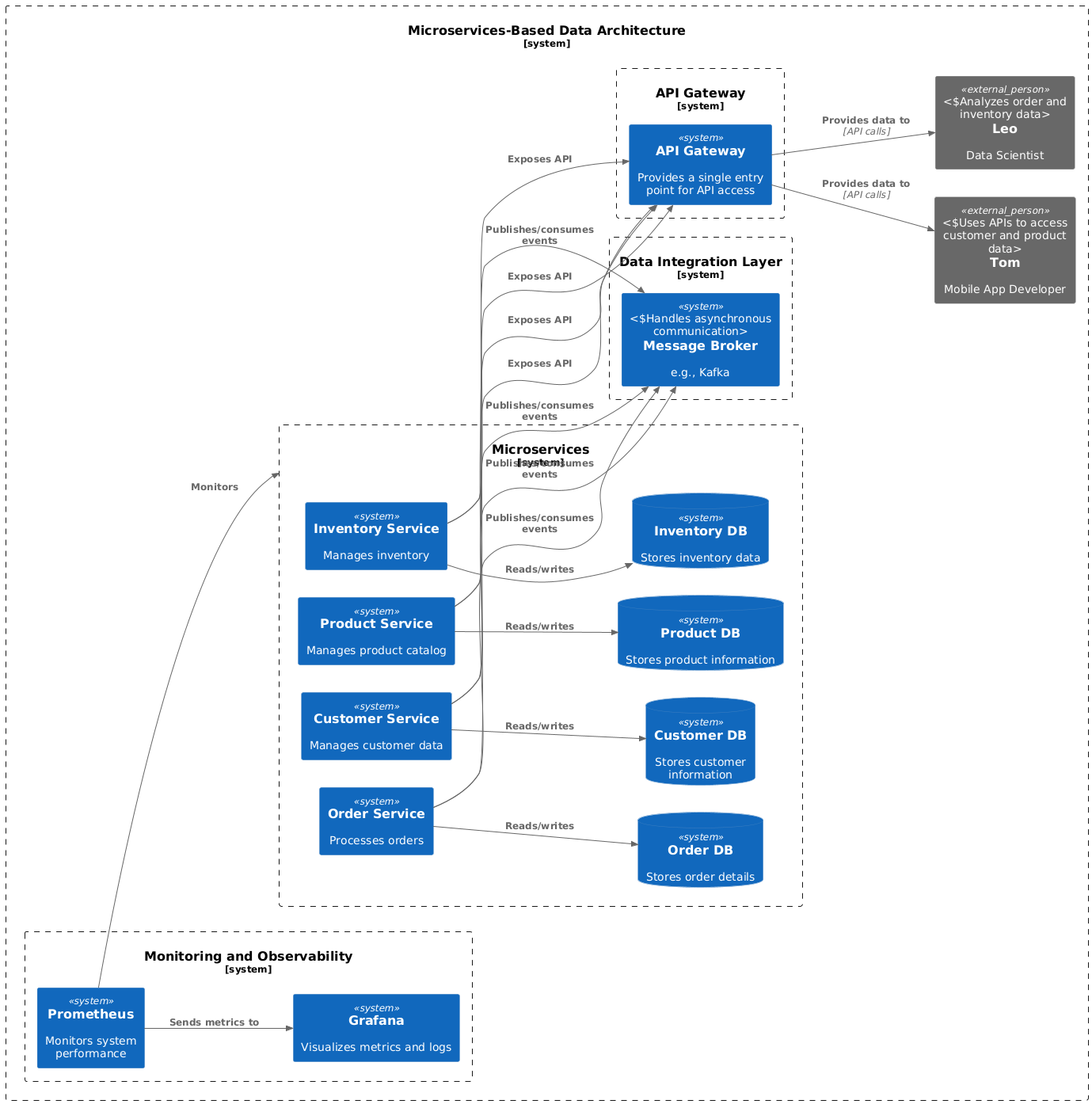

Microservices-Based Data Architecture

Microservices-Based Data Architecture is a decentralized approach to data management where each microservice has its own dedicated data store. This architecture ensures that microservices are independent, scalable, and can evolve without impacting other components of the system.

Key Components of Microservices-Based Data Architecture

1. Microservices

- Independent services that focus on specific business capabilities.

- Each service has its own database and manages its data autonomously.

Example tools: REST APIs, GraphQL, gRPC.

2. Data Stores

- Dedicated databases for each microservice, optimized for its specific requirements (e.g., relational, NoSQL, graph databases).

Example tools: PostgreSQL, MongoDB, Cassandra, Neo4j.

3. Data Integration Layer

- Manages communication and data exchange between microservices.

Example tools: Kafka, RabbitMQ, AWS SNS/SQS.

4. API Gateway

- Provides a unified entry point for external consumers to interact with microservices.

Example tools: Kong, Apigee, AWS API Gateway.

5. Monitoring and Observability

- Tracks performance, errors, and system health across distributed microservices.

Example tools: Prometheus, Grafana, ELK Stack.

How Microservices-Based Data Architecture Works

1. Each microservice operates independently, managing its own data and database.

2. Data exchanges between microservices occur through APIs or event-driven systems.

3. The architecture ensures fault isolation—issues in one service do not affect others.

4. Consumers access data or functionality through an API gateway, abstracting service complexities.
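The database-per-service idea in the steps above can be sketched with two small classes. The names `CustomerService` and `OrderService`, their methods, and the in-memory stores are hypothetical; in practice each service would run as its own process with its own database.

```python
class CustomerService:
    def __init__(self):
        self._db = {1: {"name": "Ada", "active": True}}  # private store

    def get_customer(self, customer_id):
        """Public API: the only way other services can read customer data."""
        customer = self._db.get(customer_id)
        return dict(customer) if customer else None


class OrderService:
    def __init__(self, customers):
        self._db = []  # private store; could be a different database type
        self._customers = customers

    def place_order(self, customer_id, item):
        # Ask the owning service through its API instead of reading its store.
        customer = self._customers.get_customer(customer_id)
        if customer is None or not customer["active"]:
            raise ValueError("unknown or inactive customer")
        order = {"order_id": len(self._db) + 1, "customer_id": customer_id, "item": item}
        self._db.append(order)
        return order


customers = CustomerService()
orders = OrderService(customers)

print(orders.place_order(1, "keyboard"))

try:
    orders.place_order(99, "mouse")
except ValueError as err:
    print("rejected:", err)
```

Because `OrderService` never touches the customer store directly, the customer team can change its database or schema without breaking order processing, as long as `get_customer` keeps its contract.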

Advantages of Microservices-Based Data Architecture

Scalability: Individual microservices can scale independently based on their workloads.

Flexibility: Allows teams to choose the best database type for each microservice’s needs.

Fault Isolation: Issues in one microservice do not impact others, ensuring system stability.

Faster Development: Teams can develop and deploy microservices independently.

Challenges of Microservices-Based Data Architecture

Data Consistency: Ensuring consistency across microservices is complex, especially in distributed systems.

Operational Overhead: Managing multiple databases and services increases complexity.

Cross-Service Transactions: Requires careful design to handle distributed transactions and eventual consistency.

Monitoring: Tracking issues and performance across numerous microservices can be challenging.

Some Use Cases for Microservices-Based Data Architecture

- E-Commerce Platforms: Managing product catalogs, customer accounts, and order processing as separate services.

- IoT Systems: Processing sensor data and device management through specialized microservices.

- Banking Applications: Handling transactions, customer data, and loan processing independently.

- Media and Entertainment: Streaming services with microservices for user management, content delivery, and recommendations.

Summary of Microservices-Based Data Architecture

By decoupling data and functionality, Microservices-Based Data Architecture provides a robust and scalable framework for modern, complex applications. It enables agility, scalability, and resilience, making it a popular choice for organizations embracing distributed systems.

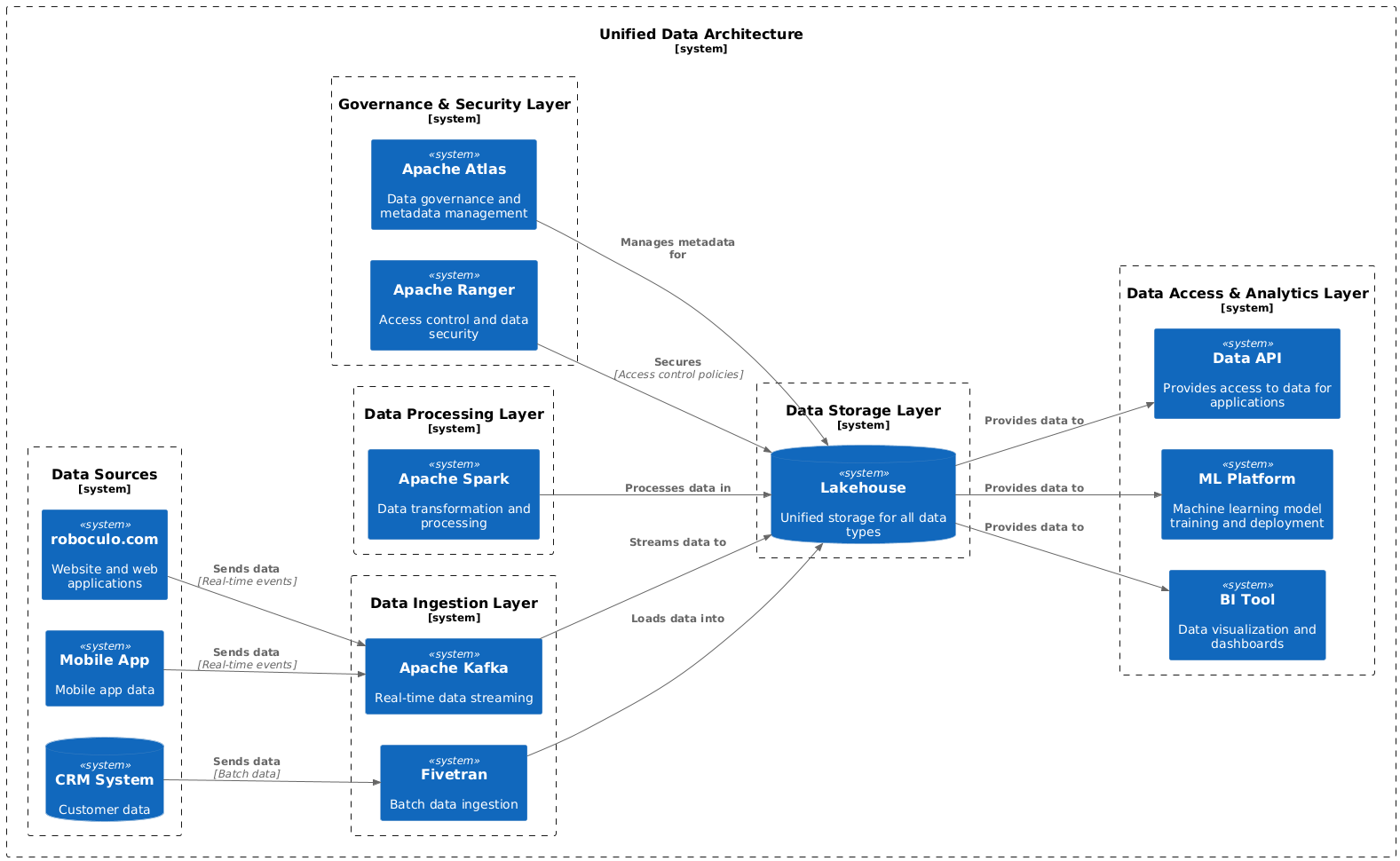

Unified Data Architecture (UDA)

Unified Data Architecture (UDA) is a holistic approach that integrates diverse data management systems such as data lakes, data warehouses, and streaming platforms into a single cohesive framework. UDA is designed to provide seamless access, processing, and analysis of data across an organization.

Key Components of Unified Data Architecture (UDA)

1. Data Ingestion Layer

- Handles the collection of data from various sources, such as databases, IoT devices, and APIs.

- Supports batch and real-time ingestion.

Example tools: Apache NiFi, AWS Glue, Kafka.

2. Data Storage Layer

- Combines data lakes for raw data, warehouses for structured analytics, and stream processing for real-time insights.

Example tools: AWS S3, Snowflake, Google BigQuery, Apache Kafka.

3. Data Processing Layer

- Enables data transformation, enrichment, and integration.

Example tools: Apache Spark, Databricks, Presto.

4. Data Access and Analytics Layer

- Provides tools for querying, visualization, and advanced analytics.

Example tools: Tableau, Looker, Power BI, AWS Athena.

5. Governance and Security Layer

- Ensures data compliance, access control, and auditing.

Example tools: Alation, Collibra, Immuta.

How Unified Data Architecture (UDA) Works

1. Data is ingested from multiple sources into the architecture using ETL/ELT tools.

2. Raw data is stored in a data lake for flexibility, while structured data is stored in a warehouse for analytics.

3. Streaming platforms enable real-time data processing for time-sensitive insights.

4. The data processing layer transforms and integrates data as required by business needs.

5. Users access data through analytics tools, benefiting from consistent governance and security.

Advantages of Unified Data Architecture (UDA)

Holistic Integration: Combines the strengths of data lakes, warehouses, and streams into one architecture.

Scalability: Easily scales with organizational data growth.

Flexibility: Supports a variety of data types and processing methods.

Enhanced Analytics: Provides real-time, batch, and advanced analytics capabilities.

Centralized Governance: Ensures consistent compliance and security across data systems.

Challenges of Unified Data Architecture (UDA)

Complexity: Managing diverse systems within one architecture requires expertise.

Cost: High infrastructure and operational costs due to multiple integrated components.

Tool Integration: Ensuring seamless interoperability between tools and platforms can be challenging.

Some Use Cases for Unified Data Architecture (UDA)

- Enterprise Data Management: Centralized architecture for organizations handling diverse data types.

- Hybrid Analytics: Combining real-time and historical analytics for comprehensive insights.

- Regulatory Compliance: Ensuring data security and compliance across multiple systems.

- AI and ML Workflows: Streamlined pipelines for training and deploying machine learning models.

Summary of Unified Data Architecture (UDA)

By integrating diverse data systems, Unified Data Architecture bridges the gap between raw data storage, structured analytics, and real-time processing, enabling organizations to harness the full value of their data.

Author’s Final Thoughts (not one more architecture)

In the rapidly evolving field of data management, selecting the right architecture is crucial for meeting business objectives while ensuring scalability, performance, and adaptability. The architectures discussed in this article, ranging from Data Lakes to Unified Data Architectures, are just a few examples of the numerous options available. However, no single architecture fits every scenario.

As a data architect, I understand that the ideal architecture design depends on various factors, including budget, project timelines, data volume, complexity of requirements, the current state of data and systems, and end-user needs. Designing a data architecture often requires compromise: balancing customer requirements with maintaining system quality and resilience. Flexibility is key, as a rigid solution may fail to accommodate future changes or challenges.

One of the most critical aspects of architecture design is forward-thinking. Considering future technologies, organizational plans, and industry trends is essential for creating systems that can adapt to change. For instance, merging companies, migrating to the cloud, enabling inter-cloud or vendor migration, and avoiding vendor lock-in are all scenarios that should be factored into the design process. An architecture that facilitates easier data transfer or re-design in the future is far more valuable than one optimized only for the present.

Ultimately, the goal is to create a system that not only fulfills immediate business needs but also provides a strong foundation for growth and innovation. By staying adaptable, leveraging the best practices of modern architectures, and prioritizing customer-centric solutions, we can build data systems that are both robust and future-proof.